Introduction

The days when all applications ran from a couple of servers, managed by a couple of administrators hidden away in the basement of an office building, are coming to an end.

Although a big chunk of traditional applications will continue to need traditional servers to run from, it cannot be ignored that containers have been changing the application landscape. More and more applications are running from containers in a distributed architecture, because the business expects applications to be delivered when they are needed, not 3 months from now. Agility drives business, and this is no different for the applications that the business relies on. No one wants to wait through an extended provisioning time for new application servers.

The three pillars

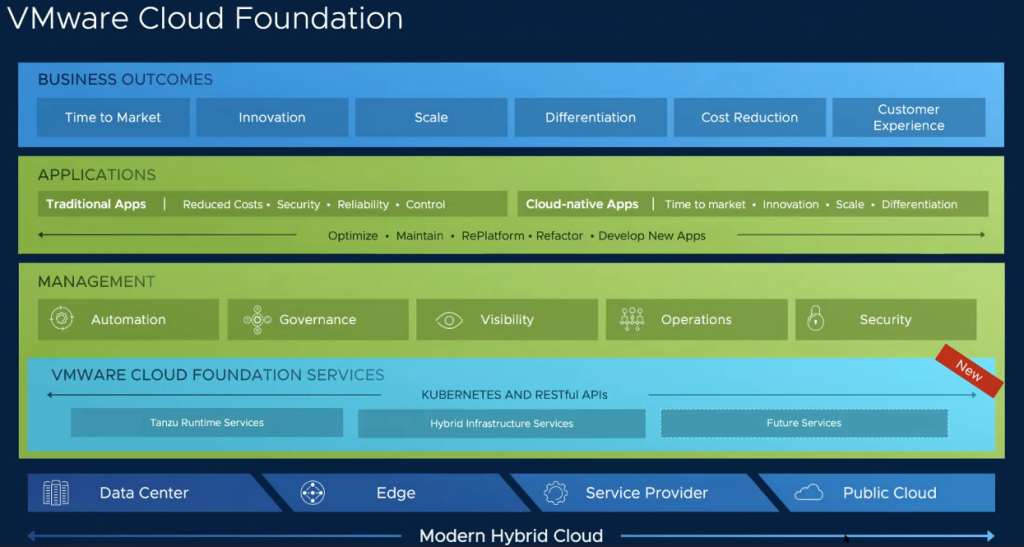

To support this need from businesses, VMware is releasing some essential updates to its portfolio and as such, vSphere 7.0 has been developed on top of 3 key pillars:

– Streamlining development: VCF 4 which comes with vSphere 7 will support Kubernetes.

– Operational agility: Application focused and a simplified life-cycle management.

– Accelerating innovation: Cost efficiency and a predictable quality of service for applications.

Project Pacific

Better ways to streamline the development process and to bring agility into operations are goals every business has, so they can accelerate the innovation at the speed at which new needs and trends emerge.

To do this, VMware has unified two technologies in Project Pacific: Kubernetes and vSphere.

However, to be able to use Pacific, you will need a few foundations in place: NSX-T for networking, vSAN for storage, vSphere 7 as a hypervisor and so on. This makes VCF the ideal platform to run Pacific from. At the time of this writing, VCF 4 is a hard requirement. Hopefully VMware will loosen these requirements somewhat.

That said, VCF 4 does bring the opportunity to be able to run containerized workloads directly from the well known and trusted vSphere platform and at the same time offer the developers access to the APIs of the full stack VCF platform: Not just Kubernetes but also the infrastructure, compute, storage, networking and management are accessible through the API.

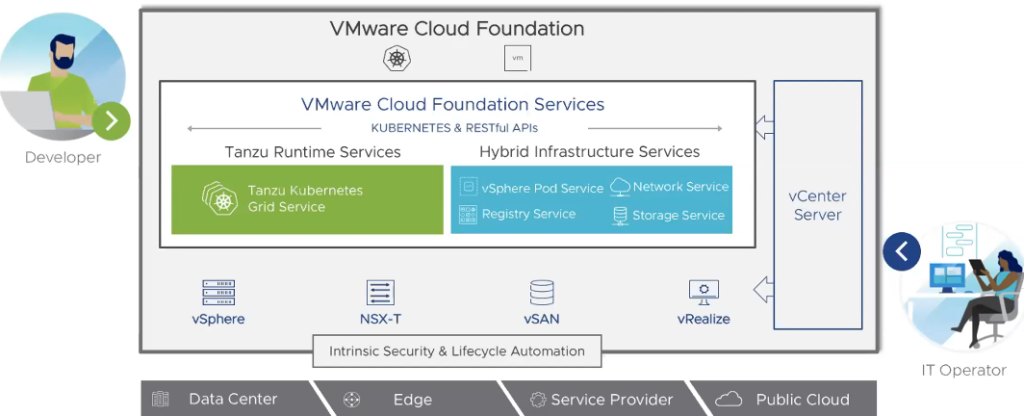

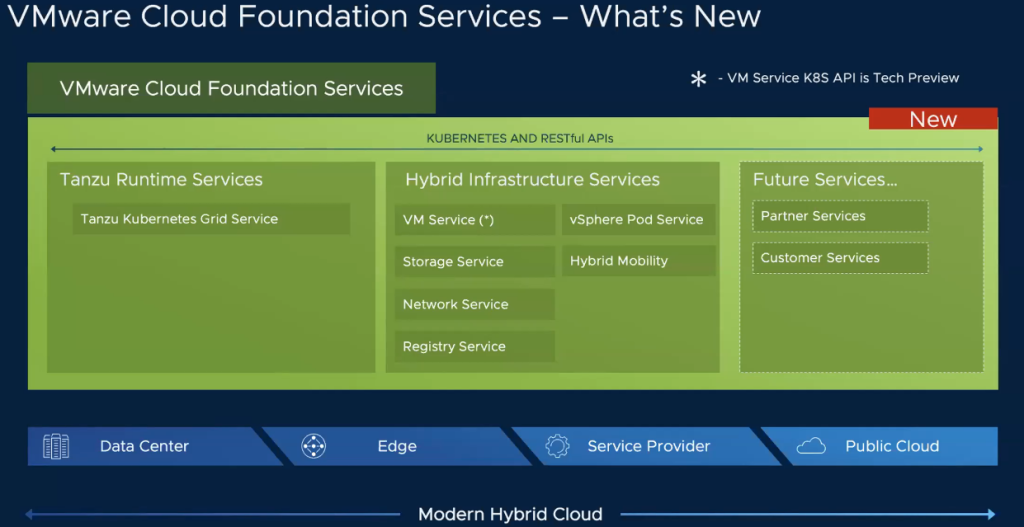

Pacific does so by exposing a new layer of services: the VMware Cloud Foundation Services. Here you will find the Tanzu Runtime Services, the Hybrid Infrastructure Services and the “future” services. You might recognize these services as the first manifestation of Project Pacific.

By offering these services, VCF lets developers consume anything it has to offer, such as Kubernetes clusters and the underlying platform. So Pacific doesn’t just bring Tanzu to the developers, it also exposes the Hybrid Infrastructure Services to them: the things developers need to support their applications, such as container image registries.

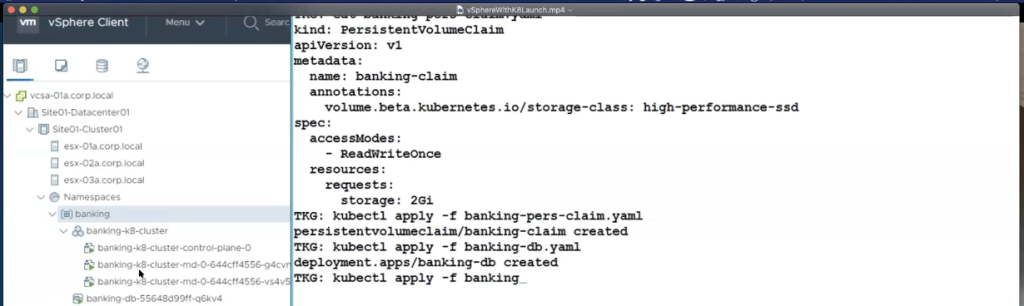

When you superimpose the view the IT administrator sees over the view of the Developer, you can see that they both can see the same environment.

Where the developer can see the namespace from his console, the administrator can see exactly the same namespace (a K8s cluster which corresponds to, for example, a specific banking application) from the UI in vSphere.

Of course, all the advantages that vSphere brings, such as HA and DRS, are available to the Kubernetes namespaces as well.

This brings us to VCF 4 itself.

VCF 4

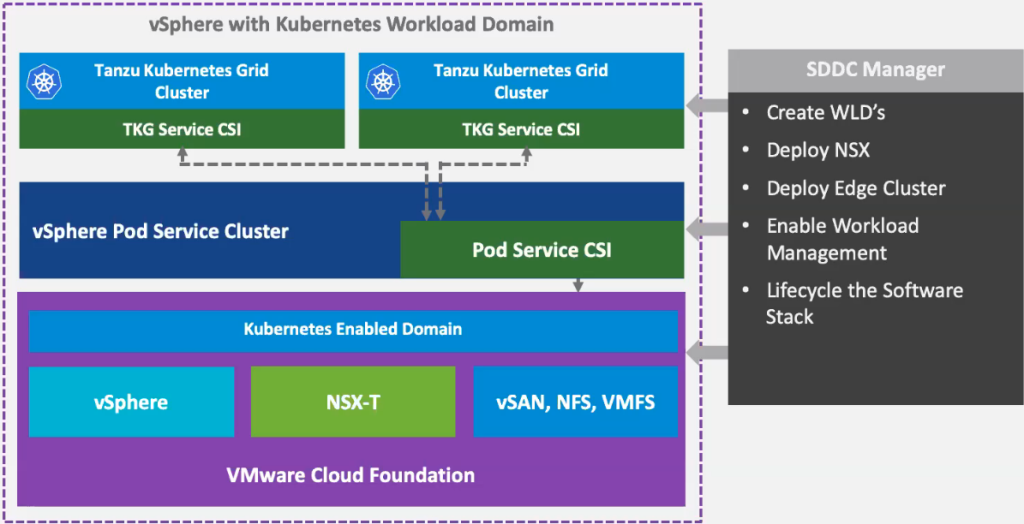

SDDC Manager still is responsible for creating and deploying the Workload Domains. However, unique to the integration of vSphere with K8s is that we can now leverage specific profiles for the Deploy Edge Cluster to make sure that the edges match the requirements for the K8s clusters.

Also new is that Lifecycle Management doesn’t only apply to the software stack, but also to the K8s runtime.

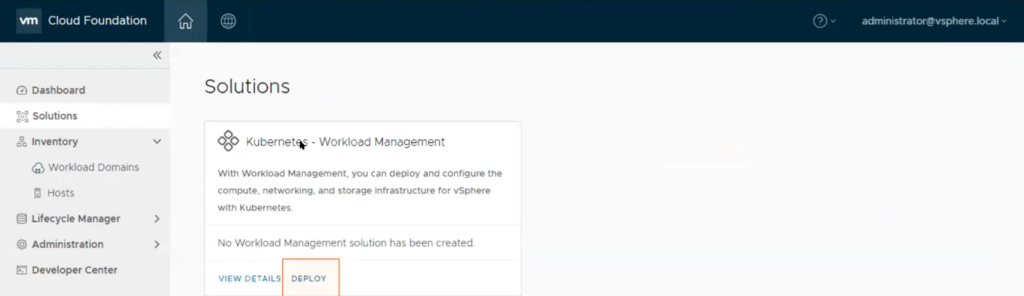

Kubernetes Support in VCF 4

One of the exciting new solutions in VCF is that you can now deploy a Kubernetes workload from SDDC Manager. Bear in mind that to do so you will need the required license keys and an NSX-T Edge cluster, and you must deploy it in a workload domain. You cannot deploy it in a management domain!

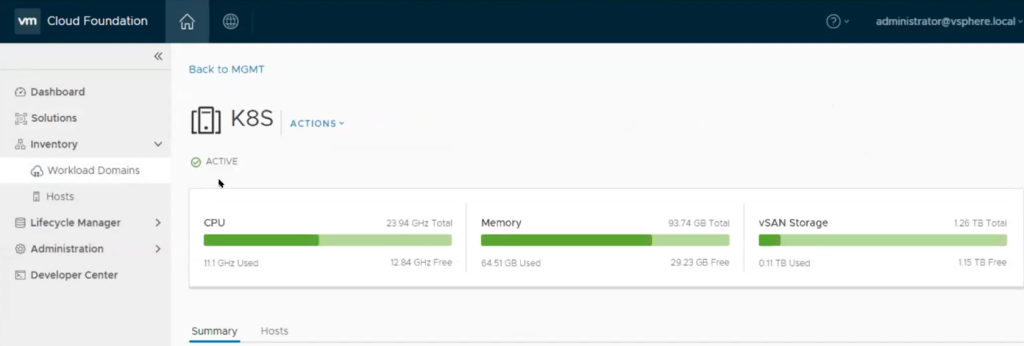

Once the deployment is finished, you can admire your first deployed K8s cluster on top of VCF from the SDDC Inventory.

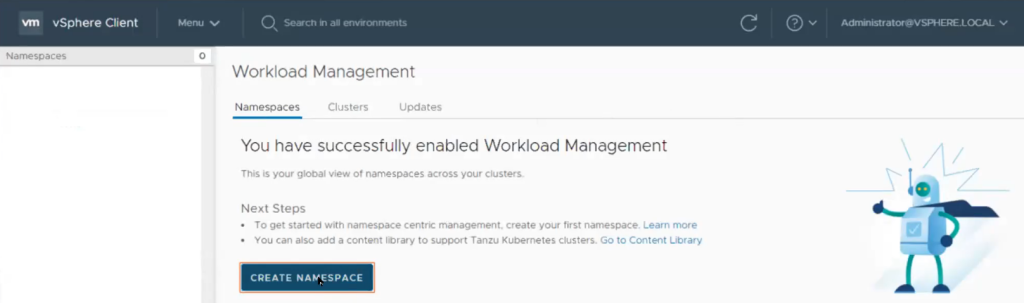

Once this is complete, you can create a K8s namespace from the vSphere client. How cool is that?

Storage in VCF 4

VCF 4 supports only vSAN in the management domain.

In the Workload Domain, vSAN itself is of course also supported as primary storage, but you can add VMFS on FC, iSCSI on vSAN and also NFS as supplemental storage next to vSAN itself. Keep in mind that you have to add it as a day 2 operation. The support for vVols is not there yet, but keep your eyes and ears open, it might be there once VCF 4 ships!

VCF 4 Federation or Multi-Instance Management

As of VCF 4, you will be able to view and manage multiple workload domains which have been deployed across multiple Cloud Foundation instances from a single deployment of SDDC Manager.

Additionally, the artist formerly known as Identity Manager, re-branded to Workspace ONE Access as of VCF 4, will provide the integration with AD and LDAP across NSX-T and the vRealize Suite.

VCF 4 Security updates

As of VCF 4 there will be a new role: the Operator role. It comes without the capabilities for password management, backup/restore and user management. These will be accessible solely to the Admin role.

PSC and AD integration will be available as of now.

Also expect public API access to now be based on token authentication.

What is new with vCenter server

vCenter Upgrade

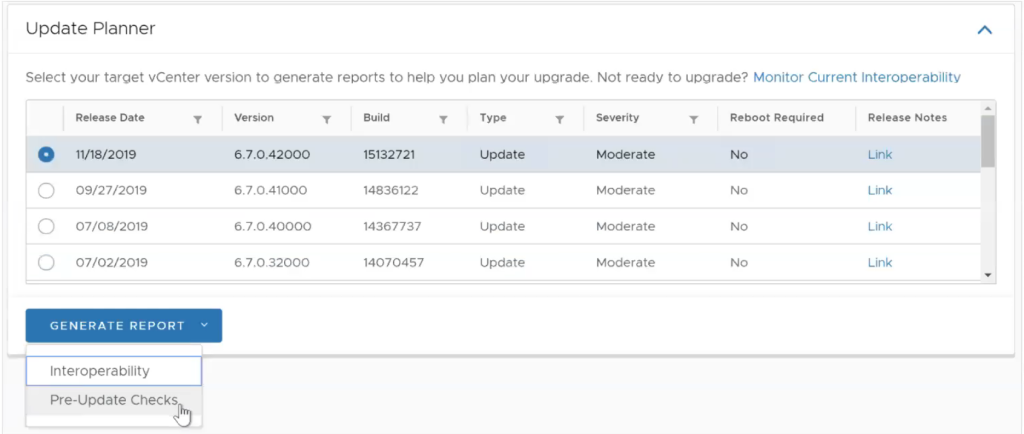

vCenter server 7 comes with the vCenter Server Update Planner, which will do the heavy lifting for you and provides the tooling needed to plan for your upgrade. It is available from the UI. Actions such as pre-update checklists and what-if workflows can all be run natively from the UI now.

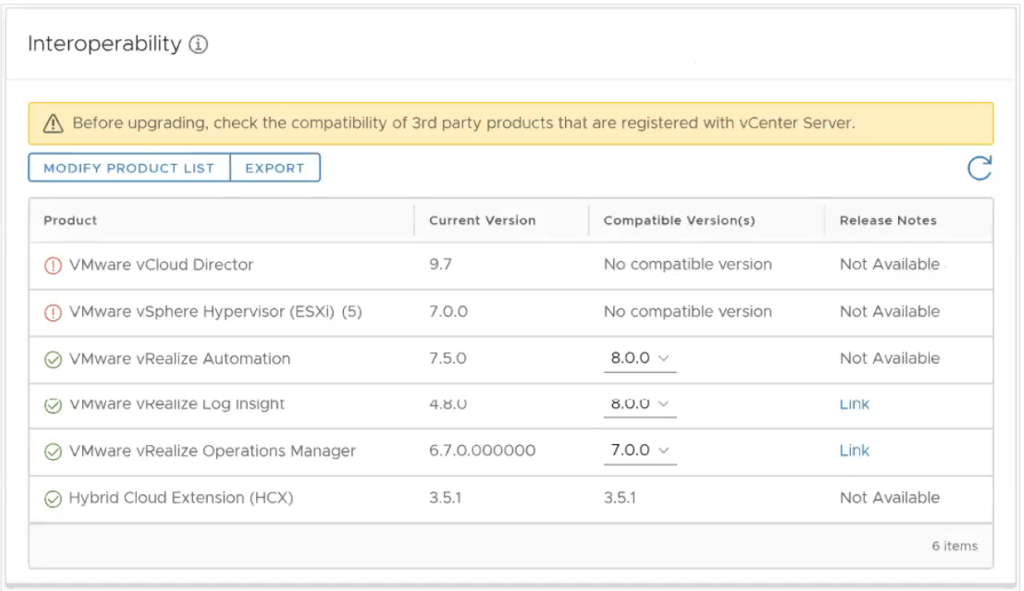

One of the most tedious actions to perform probably was figuring out if the interoperability would still be supported after upgrading vCenter server. Worry no more, vCenter 7 has you covered:

The end of the External Platform Services Controller is here but to make things easy for you, the upgrade of vCenter server and the converging of the PSC will happen at the same time, reducing the time needed to migrate the vCenter server 7 services.

So if you were looking for the convergence tool in the vCenter server 7 ISO file, you don’t have to anymore. Convergence of the external PSC happens automatically in the background.

vCenter Multi-homing

After many requests, vCenter now allows for multi-homing and will support up to 4 NICs. However, the first NIC must be reserved for HA and should not be changed at all.

vCenter 7 maximums

Stand Alone vCenter

- Hosts per vCenter = 2500

- Powered-on VMs = 30000

Linked Mode

- vCenters per SSO = 15

- Hosts = 15000

- Powered-on VMs = 150000

vCenter Latency

- vCenter to vCenter = 150 ms

- vCenter to ESXi = 150 ms

- vSphere client to vCenter = 100 ms
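The standalone and Linked Mode maximums listed above can be kept in a small lookup table to sanity-check a planned design. This is only a sketch: the dictionary keys and the function name are made up for illustration and are not part of any VMware tooling.

```python
# Limits copied from the lists above; key names are illustrative only.
VCENTER7_MAXIMUMS = {
    "standalone": {"hosts": 2500, "powered_on_vms": 30000},
    "linked_mode": {"vcenters": 15, "hosts": 15000, "powered_on_vms": 150000},
}


def exceeded_limits(mode, planned):
    """Return {metric: (planned, maximum)} for every metric over its maximum."""
    limits = VCENTER7_MAXIMUMS[mode]
    return {
        metric: (value, limits[metric])
        for metric, value in planned.items()
        if metric in limits and value > limits[metric]
    }


# A hypothetical standalone design with too many hosts.
print(exceeded_limits("standalone", {"hosts": 2600, "powered_on_vms": 28000}))
```

An empty result means the planned numbers fit within the maximums for that mode.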

Lifecycle management: vCenter Server Profiles

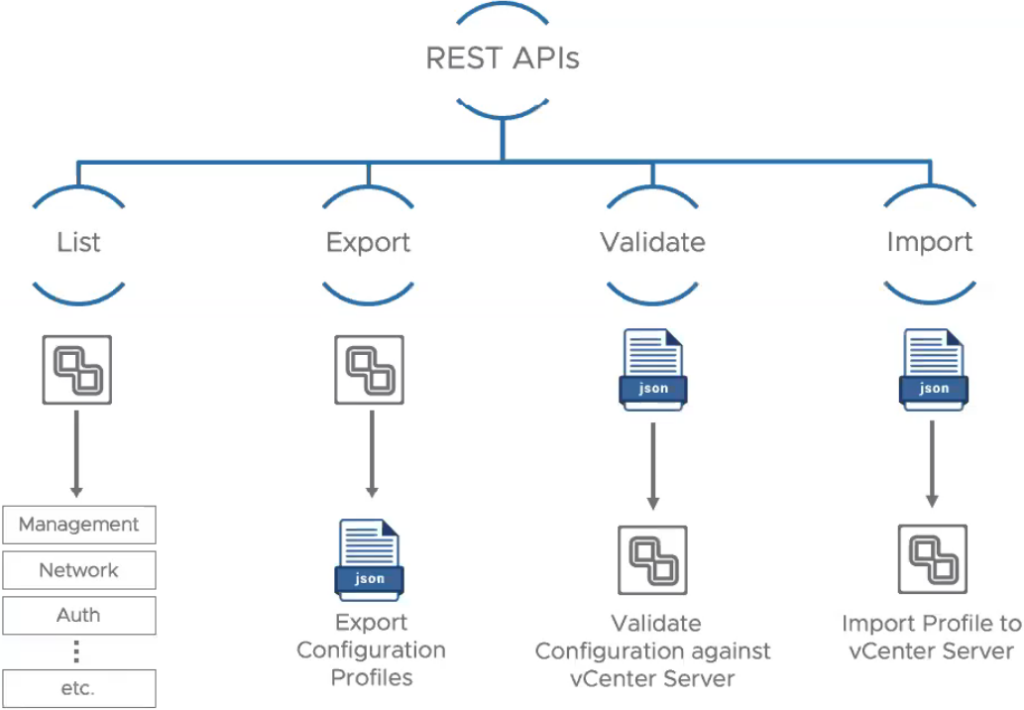

Love them or hate them, we all know the host profiles. But coming with the vSphere 7 release there is a new kid on the block: vCenter server profiles. These will allow you to baseline your vCenter server, export its configuration in the form of a JSON file and import it into other vCenter servers. Kind of like applying a desired state across vCenter servers.

The advantage in using vCenter server profiles is that one is easily able to maintain version control between vCenter servers and also easily revert to the last known good configuration in case of a mistake.

To manage vCenter server profiles you will need to make use of the API. That’s right, these will not be available from the UI.
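Because the profile is exported as JSON, keeping it under version control and spotting drift between two vCenter servers boils down to comparing two documents. The sketch below assumes two already exported profiles. The JSON keys shown are hypothetical and heavily simplified, and the helper names are my own, not part of the vCenter API.

```python
import json


def flatten(node, prefix=""):
    """Flatten nested dicts into {"a.b.c": value} pairs."""
    if not isinstance(node, dict):
        return {prefix: node}
    flat = {}
    for key, value in node.items():
        path = f"{prefix}.{key}" if prefix else key
        flat.update(flatten(value, path))
    return flat


def profile_drift(baseline_json, candidate_json):
    """Return {path: (baseline_value, candidate_value)} for every differing setting."""
    base = flatten(json.loads(baseline_json))
    cand = flatten(json.loads(candidate_json))
    return {
        path: (base.get(path), cand.get(path))
        for path in sorted(base.keys() | cand.keys())
        if base.get(path) != cand.get(path)
    }


# Hypothetical profile contents, for illustration only.
baseline = '{"appliance": {"ntp": ["10.0.0.1"], "ssh_enabled": false}}'
candidate = '{"appliance": {"ntp": ["10.0.0.2"], "ssh_enabled": false}}'
print(profile_drift(baseline, candidate))
```

An empty dictionary means the two exports agree on every setting, which is the state you want after importing a known good profile.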

Lifecycle management: Cluster Image management

We have talked about the vCenter server lifecycle management, but what about the cluster level, is there anything new there as well?

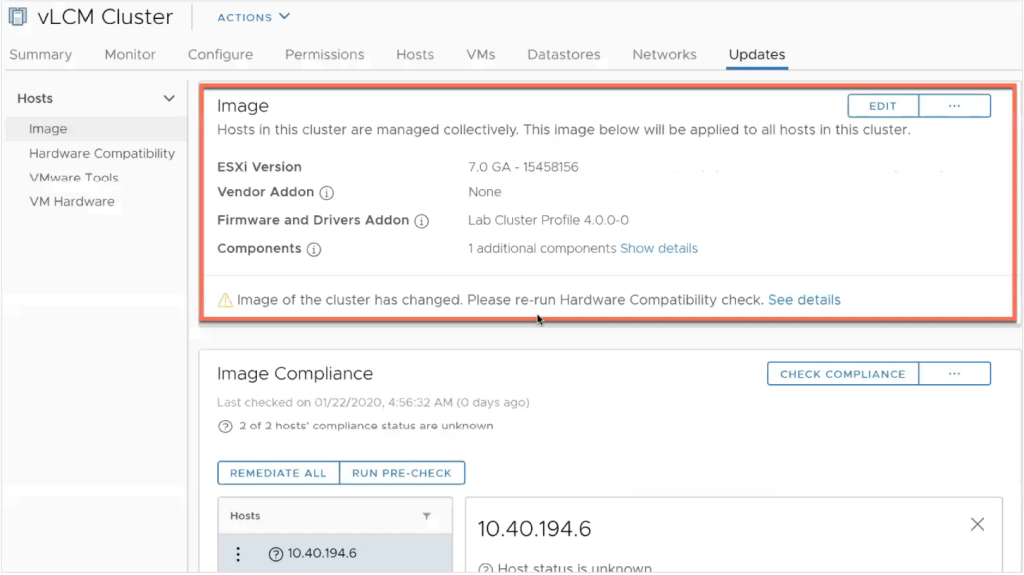

Well, yes there is! After host profiles and vCenter server profiles, VMware now brings you Cluster Image Management.

Cluster Image Management is not related to host profiles. Instead, it will allow you to plan and look after the ESXi lifecycle management. What do we mean by this?

Like with the vCenter Server Profiles, the Cluster Image Management will provide a way to implement a Desired State Model but now across your ESXi hosts on a cluster level.
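To make the desired state idea concrete: you declare one image for the cluster, and every host is compared against it. The snippet below is a conceptual sketch only. The `Image` fields, host names and version strings are invented for illustration, and this is not how vSphere implements the check internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    esxi_version: str
    firmware: str
    driver: str


def non_compliant_hosts(desired, hosts):
    """Return {host: [fields that differ from the desired cluster image]}."""
    report = {}
    for name, actual in hosts.items():
        drift = [
            attr
            for attr in ("esxi_version", "firmware", "driver")
            if getattr(actual, attr) != getattr(desired, attr)
        ]
        if drift:
            report[name] = drift
    return report


# Hypothetical cluster with one host lagging behind on firmware.
desired = Image("7.0.0", "fw-1.2", "drv-3.4")
hosts = {
    "esx01": Image("7.0.0", "fw-1.2", "drv-3.4"),
    "esx02": Image("7.0.0", "fw-1.1", "drv-3.4"),
}
print(non_compliant_hosts(desired, hosts))
```

Remediation then means bringing every host listed in the report back to the declared image, instead of patching hosts one by one.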

Lifecycle management: vLCM

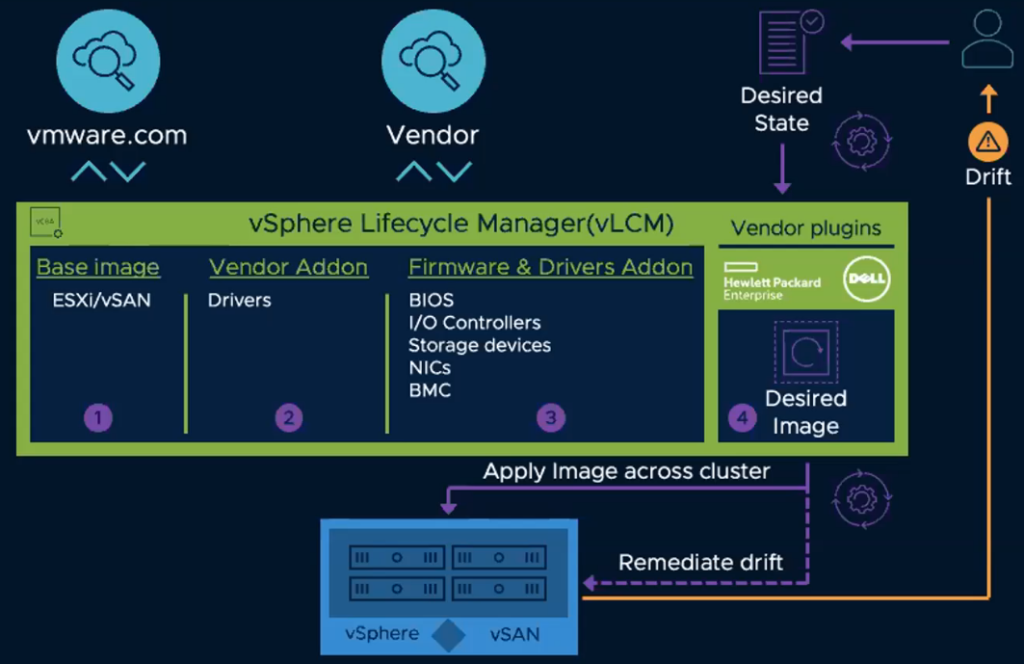

When you look at the picture above, you will spot that the new ‘Update Manager’ is now called vLCM (vSphere Lifecycle Manager), which is of course spawned from the vRealize Lifecycle Manager.

As said above, it is desired state driven and functions on the cluster level. It allows you to track the hypervisor version, firmware and drivers, and to remediate hosts in a cluster all at once.

Yes, this applies to vSAN clusters and hosts as well and will simplify keeping your vSAN clusters healthy and updated accordingly.

Another really cool thing with vSphere 7 is that it enables you to manage firmware from within the vSphere UI. Yes, this also means that you are able to remove ESXi firmware now from the UI, but also from the API.

Cluster Performance

Improved DRS

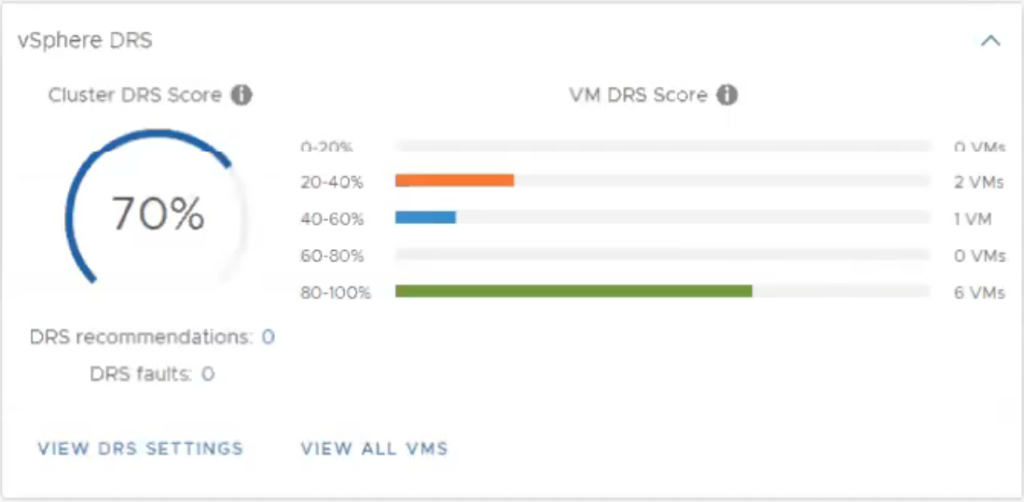

Unlike DRS in all previous releases, where DRS was rather cluster centric and calculated every 5 minutes, the improved DRS is workload centric and is calculated every minute.

So how does this work?

DRS now calculates a VM DRS score for each VM on an ESXi host in a cluster, and the DRS scores are grouped into buckets. If another host can offer a VM a better score, a DRS migration might be considered.

The DRS bucket score is calculated by looking at the following metrics:

- CPU Ready (%)

- Memory SWAP

- CPU Cache behavior

- Headroom for workload to burst

- Migration cost

Take a close look at the ‘Headroom for workload to burst’. This means that a VM will not be moved to another host if that host cannot guarantee that the workload you are trying to move has headroom to burst there.
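The bucket idea can be sketched in a few lines. Everything here is an assumption for illustration: scores run from 0 to 100 with higher being better, buckets are 20 points wide, and a move is only considered when the target host lands the VM in a better bucket and has burst headroom. This is not VMware's actual DRS algorithm, and the function names are my own.

```python
def bucket_index(score):
    """Map a VM DRS score (0-100) to one of five 20-point buckets (0..4)."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    return min(int(score // 20), 4)


def bucket_label(score):
    """Human readable bucket, for example '40-60%'."""
    low = bucket_index(score) * 20
    return f"{low}-{low + 20}%"


def should_consider_migration(current_score, candidate_score, has_burst_headroom):
    """Consider a move only to a better bucket on a host with burst headroom."""
    if not has_burst_headroom:
        return False
    return bucket_index(candidate_score) > bucket_index(current_score)


print(bucket_label(45))
print(should_consider_migration(45, 85, has_burst_headroom=True))
```

Migration cost is not modelled separately here, since the text lists it as one of the inputs to the score itself.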

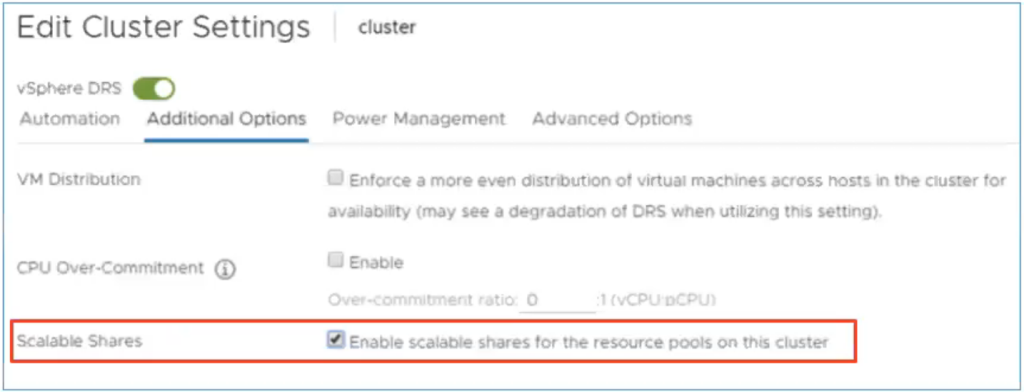

Also new are the DRS scalable shares. Scalable shares will enforce the prioritization of VMs with a higher entitlement, so that they actually get more resources than other VMs which are entitled to lower shares. The DRS scalable shares also change dynamically depending on the number of VMs. Gone are the days where a VM with normal entitlements could usurp as many resources as a VM with higher resource entitlements.

Take note that DRS scalable shares are not enabled by default!
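A bit of arithmetic shows why this matters. The sketch below assumes the shares are set on two sibling resource pools and that, with scalable shares, a pool's weight grows with the number of VMs it contains. The pool names, share values and VM counts are made up, and the model is deliberately simplified.

```python
def per_vm_fraction(pools, scalable):
    """pools maps name -> (shares, vm_count).

    Returns name -> fraction of the contended resource each VM in that pool gets.
    Static shares: the pool weight is fixed, so more VMs means less per VM.
    Scalable shares (simplified): the pool weight is shares * vm_count.
    """
    weights = {
        name: shares * vms if scalable else shares
        for name, (shares, vms) in pools.items()
    }
    total = sum(weights.values())
    return {name: weights[name] / total / pools[name][1] for name in pools}


# Hypothetical cluster: 8 VMs in a "high" pool, 2 VMs in a "normal" pool.
pools = {"high": (8000, 8), "normal": (4000, 2)}
static = per_vm_fraction(pools, scalable=False)
scaled = per_vm_fraction(pools, scalable=True)
print(static)
print(scaled)
```

With static shares, each VM in the crowded "high" pool ends up with less than a VM in the "normal" pool. With scalable shares, each "high" VM gets twice what a "normal" VM gets, matching the 8000 to 4000 ratio.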

Assignable hardware

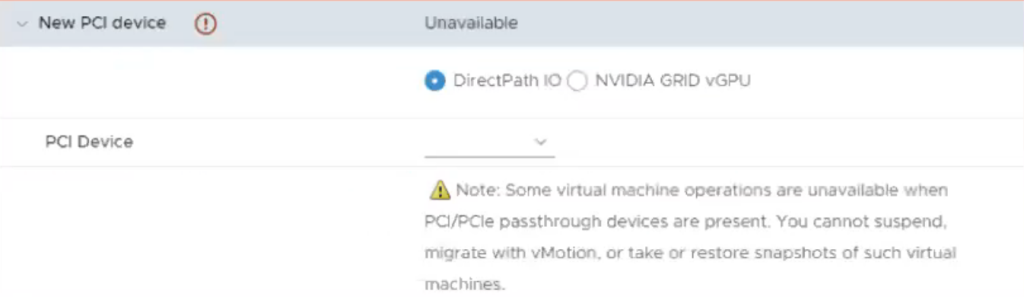

Prior to vSphere 7, when hardware was assigned to a VM, for example DirectPath IO, this VM was literally assigned to a specific host, and HA couldn’t move this VM to another host in case of emergency.

With vSphere 7 we bring some of this goodness back to the table: Support for initial placement and support for dynamic DirectPath IO enabled VMs.

In case of NVIDIA GRID vGPUs, specific profiles can be assigned to VMs, which will bring support for initial placement and support for dynamic DirectPath IO enabled VMs.

vMotion improvements

vSphere 7 brings a reduced stun time, the time where a VM is basically halted so that the active memory pages can be copied to the new host during the vMotion.

Where prior to vSphere 7 a vMotion of a VM with 24 TB of memory could take up to 2 seconds, it will now take mere milliseconds.

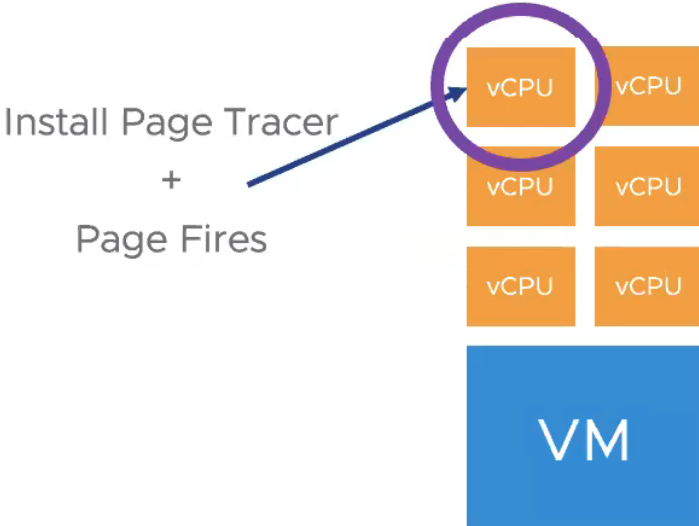

How, you ask? In vSphere 7 only a single vCPU is claimed to install the page tracer on, and instead of transferring the whole bitmap during switch-over, only the compacted bitmap is transferred.

Security updates

Intel Software Guard Extensions (SGX)

Intel Software Guard Extensions (SGX) allow applications to work with the hardware layer to create a secure enclave which cannot be viewed by the guest OS or hypervisor.

Of course this applies to Intel only.

But this also has some repercussions. Being able to hide things from the underlying OS and hypervisor also means that services which depend on the hypervisor will not work anymore for these VMs. Just think about vMotion and snapshots.

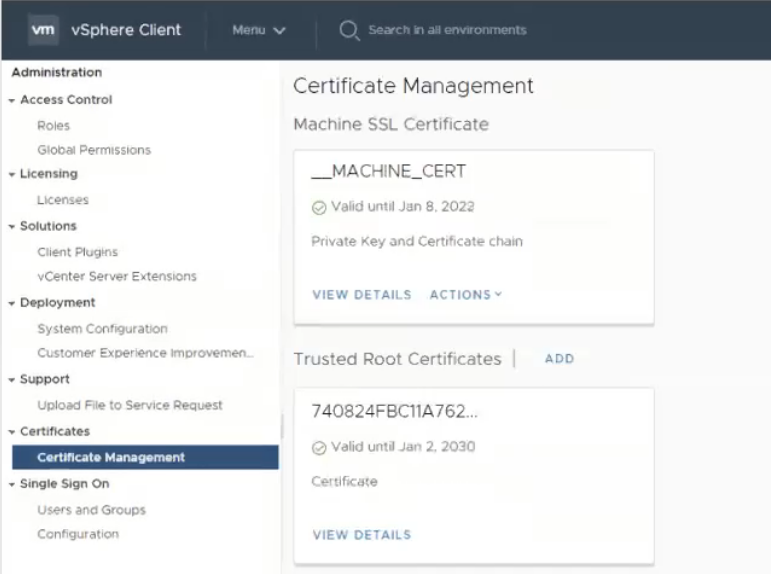

Certificate management

Did you also get fed up with the certificate management in vSphere 6? I know, it is a pain to manage.

vSphere 7 will bring some major improvements here. Certificates have been reduced. Yes, reduced that much!

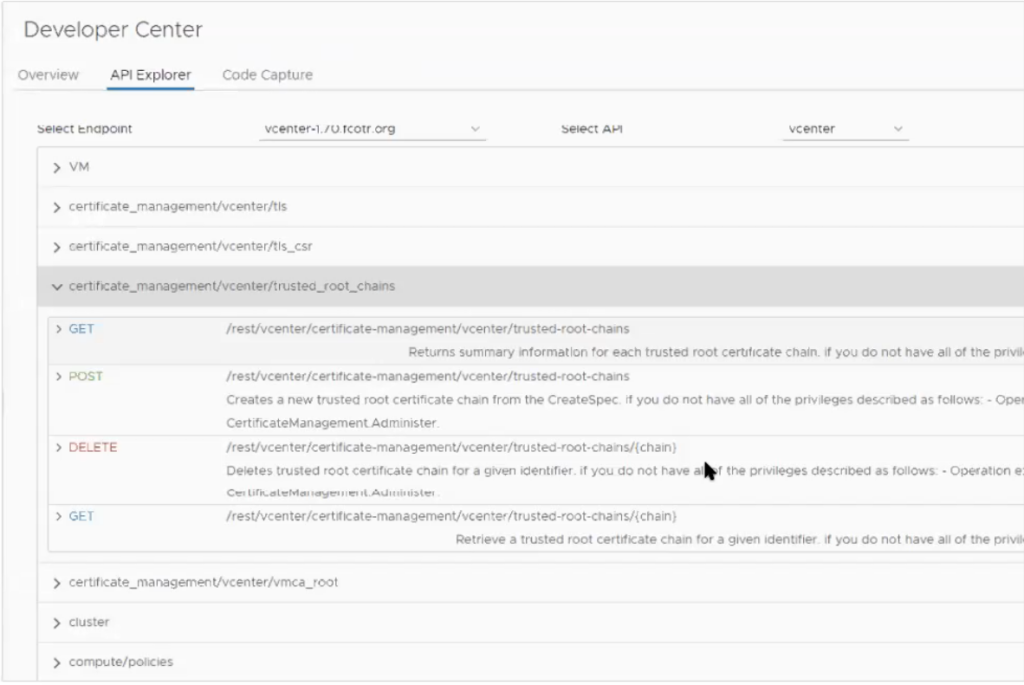

APIs can be used to manage the certificates in vCenter now.

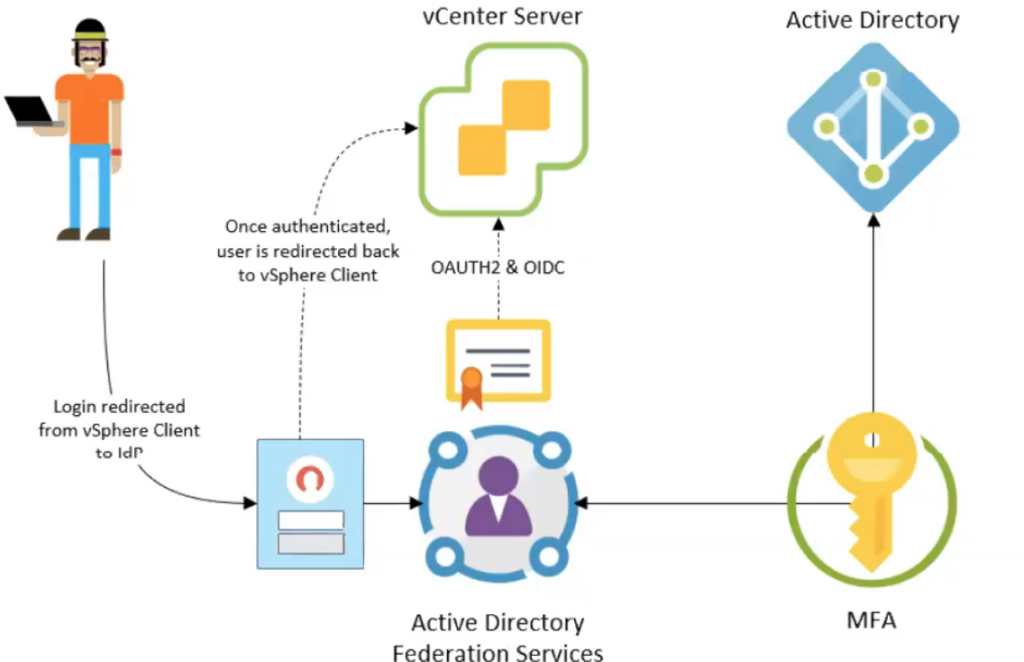

Identity Federation

Built on OAuth 2.0 and OIDC, it allows vCenter server to redirect authentication to an external identity provider, making audits less of a pain. However, it works only with ADFS for now.

Does this mean that SSO is out of the window? No, it still exists. You can still choose to go for AD LDAP integration, but realize that OAuth 2.0 with ADFS is a possibility too now.

Take note that Identity Federation only works for vCenter server at the moment.

But the good news is that Federated Identity will work with Kubernetes which comes with VCF and Tanzu!

vSAN 7

You have already found out now about the new and improved way VMware will allow you to apply lifecycle management to your hosts and clusters. But what else is new for vSAN?

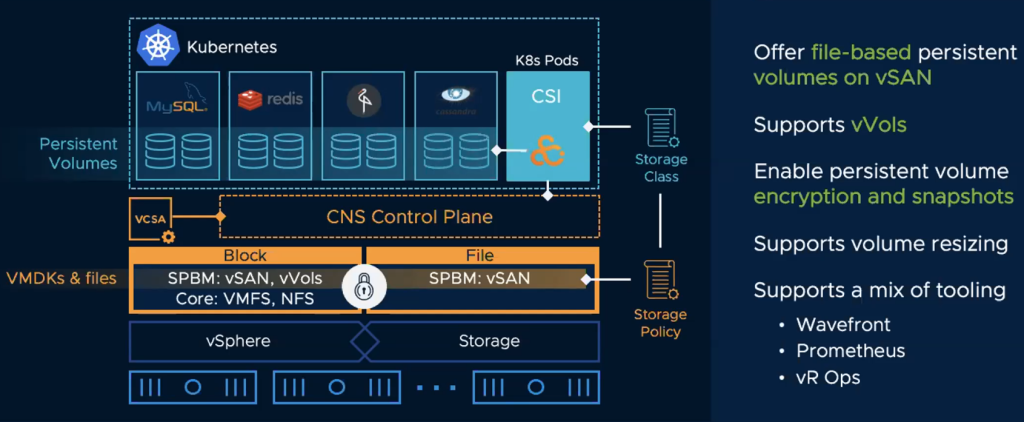

File Services

At the end of 2019, I was part of the Beta team who tested the file services, and keeping this a secret was very hard because I have been looking forward to the support for built-in file services for so long!

So what are they?

Well, they are built into the hypervisor, managed by vSAN, and can be configured from the vCenter UI. The file services will support several use cases, covering both cloud native and traditional workloads.

For example: the vSAN file services will be able to support the persistent volume requirements of the cloud native apps, but also provide file shares for users.

But bear in mind that these new file services have not been created to replace large scale file systems. Read the product documentation and use common sense.

The file services will support both NFS 3 and 4.1.

If you are wondering if these file services can be encrypted, yes they can. vSAN is able to encrypt these with data at rest encryption.

To monitor these file services you can use Wavefront, Prometheus and the all time on premises favorite: vROps.

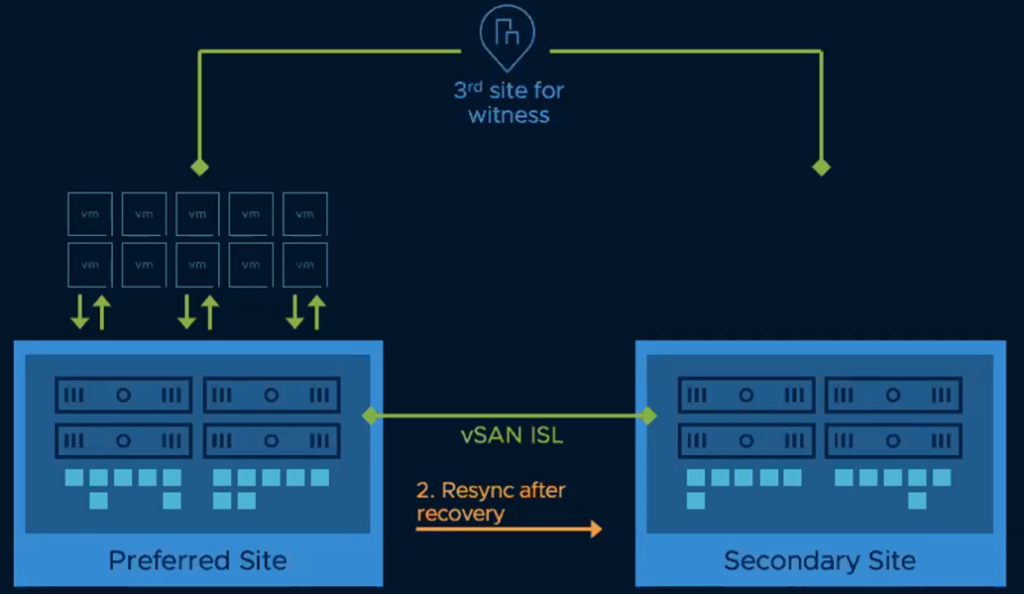

Stretched clusters and 2-node topologies

The deployment of stretched clusters and 2-node clusters which make use of a routed network, has been simplified by providing a default gateway override from the UI so there is no need anymore to set a static route on the ESXi host.

Also, it will be easier to track misconfiguration.

Also DRS will now be aware when you are running a stretched cluster and will use intelligent placement for your VMs within your stretched cluster.

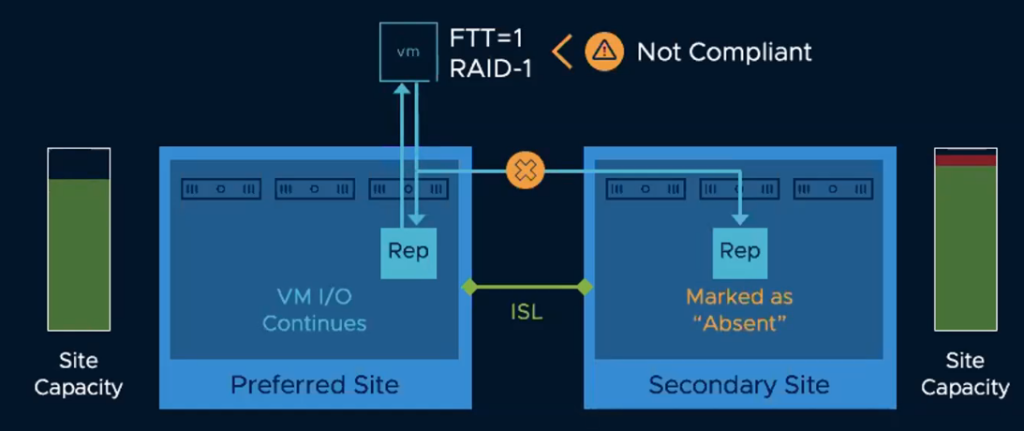

Let’s say there is an outage on the ISL (Inter Site Link), how do vSAN and DRS now orchestrate the VM placement, resync and recovery?

HA would kick in and restart some VMs in the preferred site of course. But in case the ISL comes back up before the whole migration has completed, vSAN will now do something different from what it used to.

In case of resyncs after the recovery has started, DRS will not migrate VMs back to the other site until the resync is complete. This will of course reduce the ISL traffic which would normally take place, increase the VM read performance and reduce the ISL inefficiencies.

A big improvement has also been made for the witness host. With vSAN 7, in case you have to replace the witness host, a ‘replace witness’ workflow will make sure that the cluster immediately knows the witness has been replaced, and a resync will be started promptly.

Capacity management wise, the stretched clusters will now prevent a capacity imbalance. In case a site experiences high capacity utilization and a VM is constrained, vSAN will mark the VM components in the stretched cluster as absent and the VM will now process the I/O from the other site!

Operations and Management Enhancements

vSAN 7 brings improvements for VM capacity reporting. It reduces capacity confusion by accurately displaying the effective capacity usage of thin provisioned VMs, swap objects and namespace objects, in the UI as well as through the API.

The UI will now also display how much memory the vSAN services have been using over time, which can be tracked on a per-host basis. So you won’t have to speculate anymore.

The visibility for vSphere replicated data has been improved as well. You can now easily see which objects are created and used by vSphere Replication.

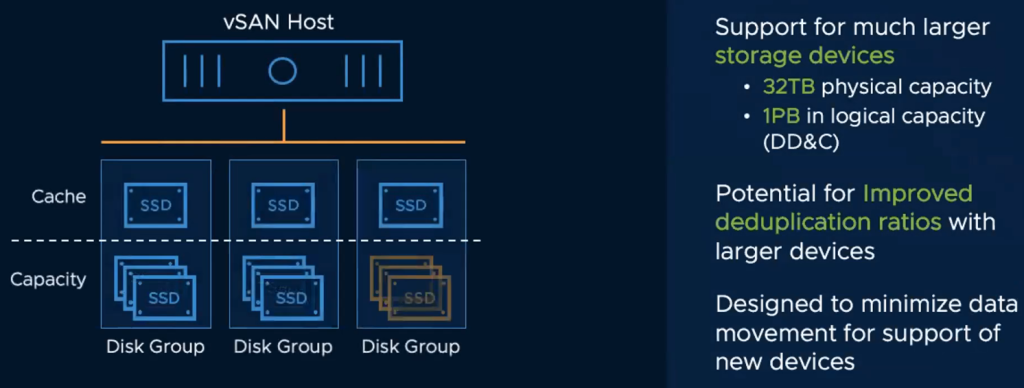

Support for much larger capacity devices is here as well now. I will let the following picture speak for itself:

Did you notice something isn’t mentioned here? You are right: if you are wondering whether the cache capacity size for all-flash vSAN has been changed, it has not. The same capacity limitation still applies.

vROps 8.1

vROps 8.1 is bringing a lot of most welcome new features:

- The choice between an on-premises version and a SaaS version of vROps 8.1!

- A deeper integration between vROps and vRA.

- More efficient capacity and cost management, including better integration of cloud services through the integration of CloudHealth.

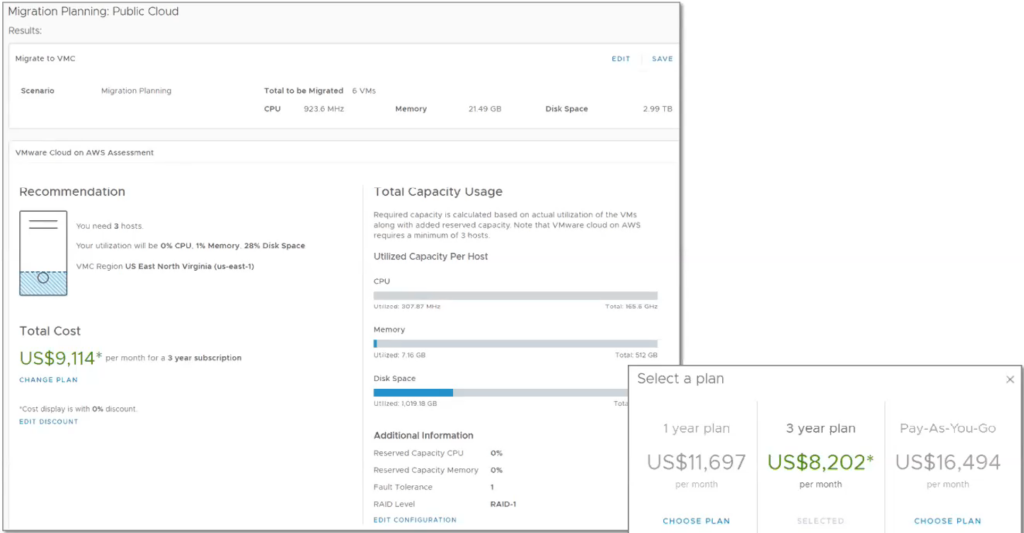

- Improved migration assessments to VMware Cloud on AWS.

- Native support for vSphere 7 with K8s on VMware Cloud on AWS.

- Auto-discovery of K8s constructs and Tanzu control clusters as soon as you implement them.

- vROps 8.1 also expands further into the cloud with an integration with Google Cloud Platform next to the already existing integration with Azure and AWS.

- New and optimized management packs for, for example, VxRail, AWS, SDDC, DISA and CIS.

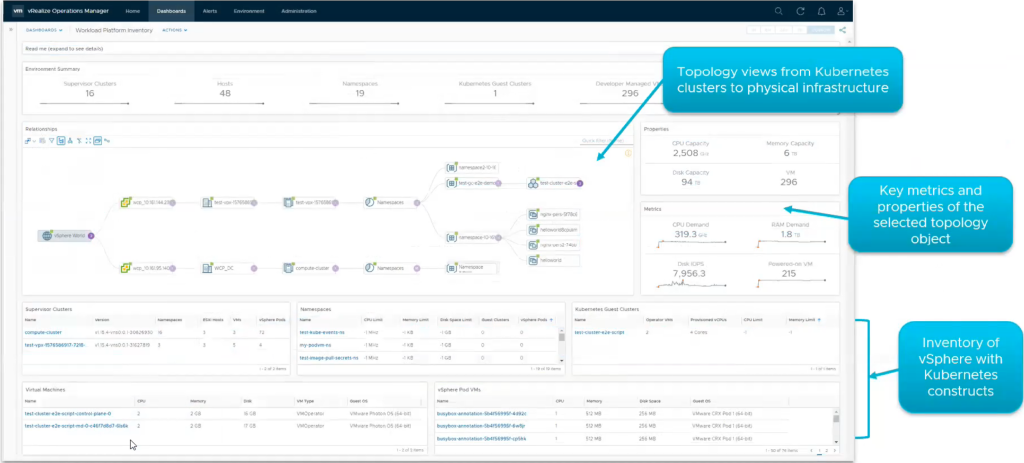

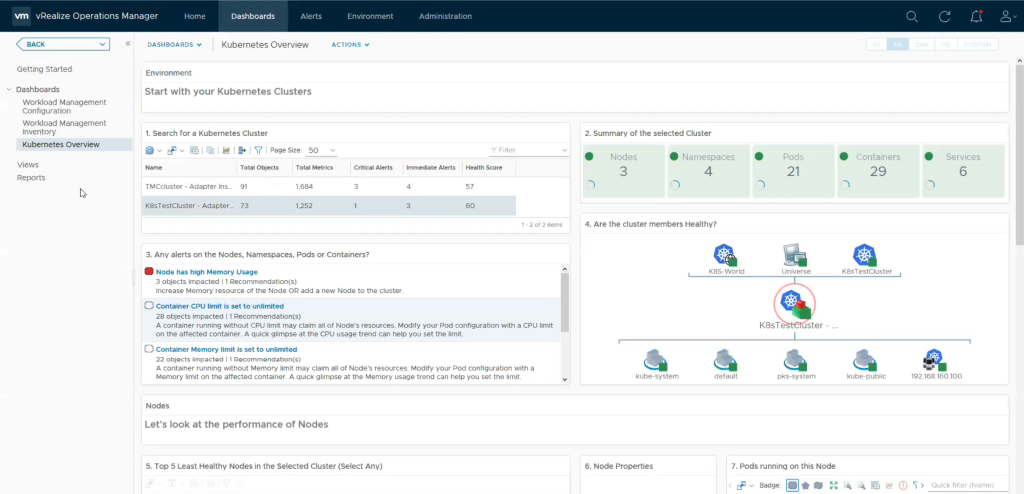

K8s Operations

With the integration of K8s, updates to the self-driving operations capabilities of the SDDC, which include Kubernetes, are most welcome. Let’s have a quick peek here.

It doesn’t stop at the direct integration between vROps and vSphere 7 with K8s. There is also a new container management pack which allows you to have more visibility into K8s and at the same time allows you to monitor multiple K8s clusters.

Multi-cloud monitoring

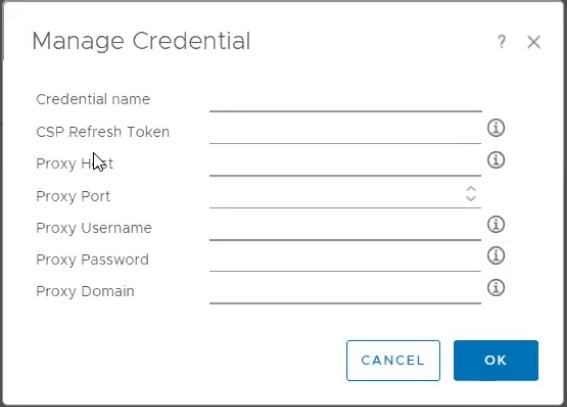

Multi-cloud management and operations are very important. It is easier now to get insights into the biggest cloud providers from vROps. Next to Azure and AWS, VMC is now also directly available from the ‘Cloud Accounts’ menu and integration with VMC has been made very easy:

- A single account to manage multiple VMC SDDCs which include vCenter, vSAN, NSX and VMC Billing.

- To integrate VMC into vROps, all you have to do is generate a CSP token and apply it in the cloud account workflow.

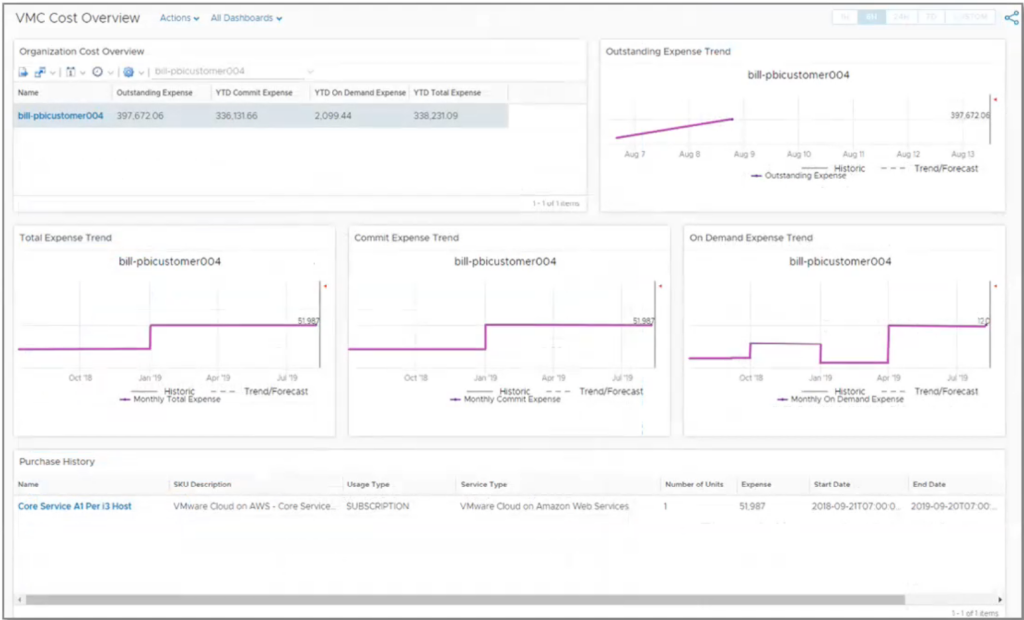

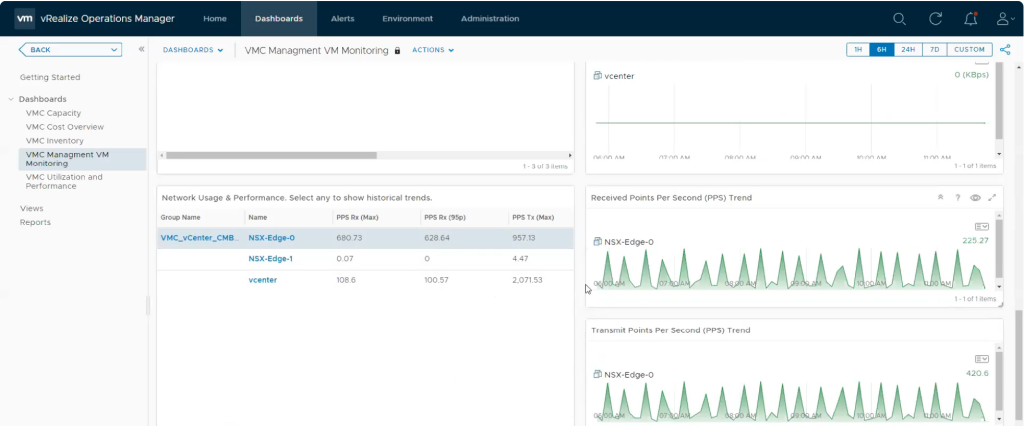

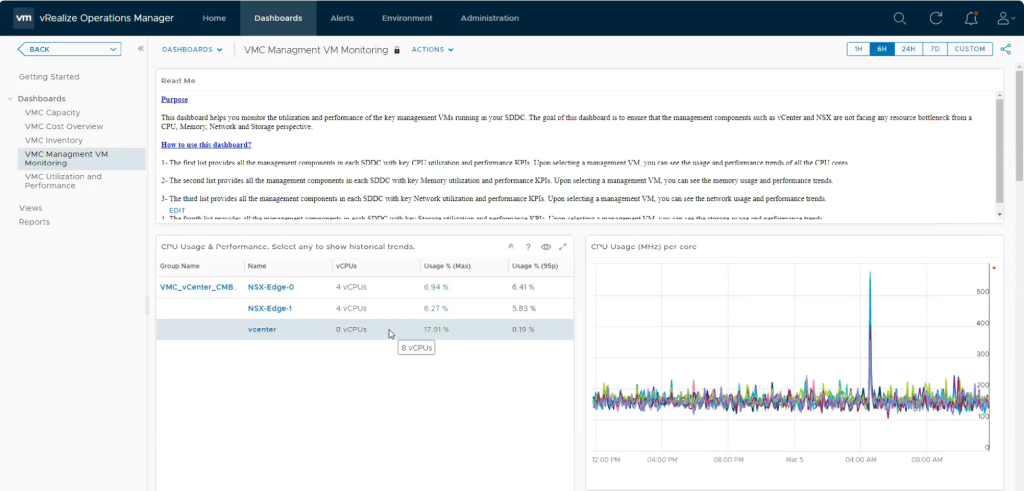

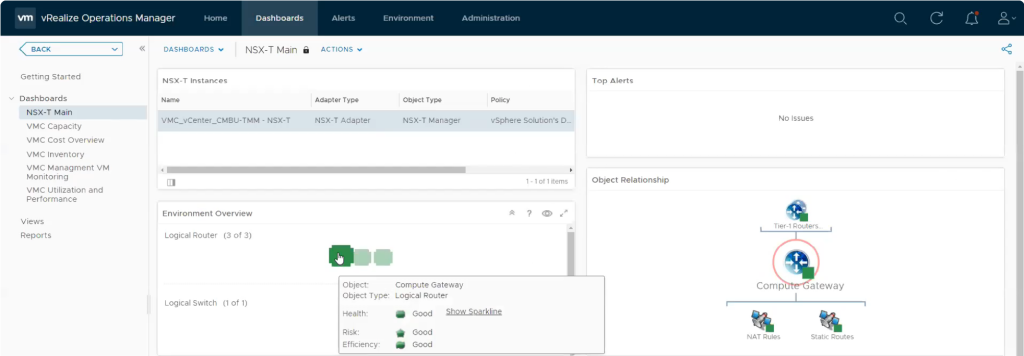

vROps 8.1 also has dashboards which put an emphasis on critical capabilities and components within VMC. There is a dashboard to monitor the KPIs of NSX routers, track network usage and monitor key resources, a dashboard to track costs within your VMC SDDCs, and a dashboard to make a migration assessment to the public cloud.

Because the SDDC relies more and more on NSX-T, the capabilities to monitor and operate NSX objects from vROps have been ramped up considerably.

To be honest, there is too much new stuff in Operations, so let me highlight a few exciting new capabilities by showing you some dashboards to stimulate your curiosity. You might also want to have a look over here:

https://blogs.vmware.com/management/2020/03/whats-new-in-vrealize-operations-8-1.html.

Kim
