Introduction

So in the first part Chris (@UltTransformer) and I (@Dark_KnightUK) played with and broke the NSX-T Manager. We thought it was the Edge’s turn, so… now to test Edge failures!

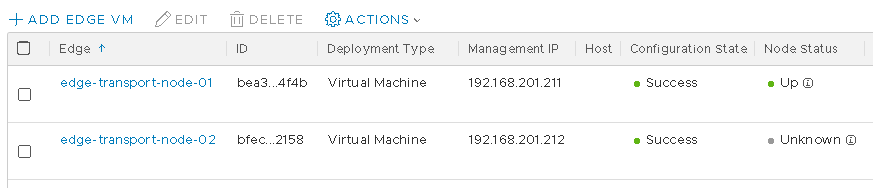

Let’s recap. We have one(1) Edge cluster with two(2) Edge Nodes of medium size. We have a T1 configured that connects to two(2) T0s (active/active) and both T0s have “external” access (within the lab).

All is good with the world!

Edge Nodes Explained

This is an interesting concept. Edge Transport Nodes are VMs, the Edge Nodes are containers/VRFs and the SR/DR are services deployed inside these containers/VRFs. At least that’s the way we see it.

You can have up to ten(10) Edge Nodes in a cluster.

Edge Nodes can be physical (bare-metal) servers as well. KVM is not currently supported for Edge Nodes.

Edge Node Failures

So what happens if we start to cause problems, like losing some of our Edge Nodes?

Let’s power down both Edge Nodes and see what happens. POW! Down went all my N/S connectivity, as we’d expect. The VMs could still talk to each other, even if they were on different hosts and in different segments on the same T1, but North/South traffic was completely gone. So, we proved the DR (distributed routing) element of the T1/T0 still functions on each of the ESXi hosts, even with both Edge Nodes powered off.
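To make that result a bit more concrete, here is a tiny toy model of what we saw. It is only a sketch of the behaviour, not anything NSX-T actually runs, and the `Lab` class and the edge names are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Lab:
    """Toy model: the DR lives on every ESXi host, SRs live only on Edge Nodes."""

    # Names of the Edge Nodes that are currently powered on (hypothetical names).
    edges_up: set = field(default_factory=set)

    def east_west_ok(self) -> bool:
        # Routing between segments on the same T1 is done by the DR on each
        # host, so it does not depend on any Edge Node being alive.
        return True

    def north_south_ok(self) -> bool:
        # N/S traffic has to cross an SR, and SRs only exist on Edge Nodes.
        return len(self.edges_up) > 0


lab = Lab(edges_up={"edge-01", "edge-02"})
lab.edges_up.clear()  # power off both Edge Nodes
print(lab.east_west_ok(), lab.north_south_ok())  # prints: True False
```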

What would have happened if we only powered down one of our Edge Nodes? Well let’s not stop there, let’s delete it as well!

Power off and delete one(1) Edge Node from the Edge Node Cluster in vCenter. In this instance we still had the second node running and passing traffic, but there were some outages (ping losses in our case) while our routing protocol, BGP, recalculated. After a few seconds/minutes we were back in business.
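If you want a number rather than “a few seconds/minutes”, you can work the outage out from a timestamped ping log. The sketch below assumes you have already turned your ping output into (timestamp, replied) pairs; the function name and the sample log are made up, not taken from our lab.

```python
def longest_outage(samples):
    """Return the longest outage in seconds.

    `samples` is a time-ordered list of (timestamp_seconds, replied) tuples.
    An outage runs from the first lost ping to the next ping that replies.
    """
    longest = 0.0
    outage_start = None
    for ts, replied in samples:
        if not replied and outage_start is None:
            outage_start = ts  # first lost ping of a new outage
        elif replied and outage_start is not None:
            longest = max(longest, ts - outage_start)
            outage_start = None
    # An outage still open when the capture ends is counted up to the last sample.
    if outage_start is not None and samples:
        longest = max(longest, samples[-1][0] - outage_start)
    return longest


# Hypothetical one-per-second log: two replies, four losses, then recovery.
log = [(0.0, True), (1.0, True), (2.0, False), (3.0, False),
       (4.0, False), (5.0, False), (6.0, True), (7.0, True)]
print(longest_outage(log))  # prints: 4.0
```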

Now at this point there is only one(1) working Edge Node in vCenter, and NSX Manager still lists the now vanished Edge Node with errors. This needs to be resolved.

Deploy a new edge node (you can use the same IP/name or go with something different). It appears NSX Manager does not care about the same IP/FQDN.

*Note: if you use the same NAME for the new Edge Node and the faulty node is powered off in vCenter but not removed, you will get an error because that VM name already exists in vCenter.*

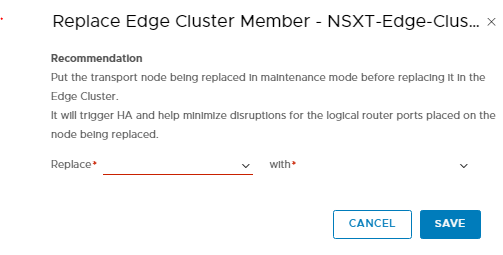

Now in the Edge Clusters you can do a swap out. This is when you pick the new Edge Node that isn’t in a cluster and tell NSX Manager to swap it out for your failed node, as seen below:

Before we swap out the Edge Node, let’s see what’s on it.

Services & CLI

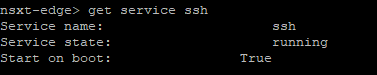

Log on to the new Edge Node via console and start the SSH services using the following method.

- At the command line type: start service ssh

- If you want to make sure SSH starts at boot-up type: set service ssh start-on-boot

- Then type get service ssh to confirm it’s configured correctly

You should now be able to SSH to your Edge Node. Give it a go!

Once connected, type get logical-routers. Since this is a blank Edge Node, it won’t contain any VRFs, but as soon as you do a swap out you will see it list all your DRs and SRs (aka VRFs).

Back to Edge Node Failures

Complete the step above and replace your Edge Node in the cluster.
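We did the swap out in the UI, but if you would rather script it, something like the sketch below might be a starting point. Big assumption here: that your NSX-T version exposes a replace_transport_node action on the edge-clusters API taking member_index and transport_node_id. We have not tested this, so check the API guide for your release first. The manager name and IDs are placeholders, and the code only builds the request; it does not send anything.

```python
import json
import urllib.request


def build_swap_request(manager, cluster_id, member_index, new_node_id):
    """Build (but do not send) a request to swap one Edge Cluster member.

    ASSUMPTION: the endpoint and body fields below match your NSX-T release.
    Verify against the official API guide before using this for real.
    """
    url = (
        f"https://{manager}/api/v1/edge-clusters/{cluster_id}"
        "?action=replace_transport_node"
    )
    body = {"member_index": member_index, "transport_node_id": new_node_id}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder values only; authentication is deliberately left out.
req = build_swap_request("nsxmgr.lab.local", "edge-cluster-uuid", 0, "new-edge-node-uuid")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Building the request separately from sending it means you can eyeball the URL and body before pointing it at a real NSX Manager.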

You can now delete the vanished Edge Node from NSX Manager as it doesn’t belong to anything. It will take a bit of time and moan, but it will be deleted. It moans because it can’t see the node in vCenter (because we killed it already).

Now we also tried creating a new Edge Node and just adding it to the cluster with the dead Edge Node still there. It added fine, but when we went to the command line it was still empty, with nothing recreated. We suspect this is because the T0 active/active VRFs are being pushed to the original Edge Nodes. It doesn’t seem like we have great distribution of services at this point. Maybe in future releases?

So, make sure you do the swap out method so the services transfer/recreate across, otherwise you will just have an empty edge node doing nothing useful!
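Here is how we picture the difference between adding and swapping, as a toy model. This is purely our mental picture of what we observed, not how NSX Manager is actually implemented; the `EdgeCluster` class, the idea of services pinned to member slots, and the service names are all made up for illustration.

```python
class EdgeCluster:
    """Toy model of the add-versus-swap behaviour we observed."""

    def __init__(self, members):
        # Each slot holds a node name; services are pinned to the slot index.
        self.slots = list(members)
        self.services = {
            i: ["SR-T0", "DR-T0", "DR-T1"] for i in range(len(self.slots))
        }

    def add(self, node):
        # A plain add creates a new, empty slot. Nothing is moved onto it.
        self.slots.append(node)
        self.services[len(self.slots) - 1] = []

    def swap(self, dead, new):
        # A swap reuses the dead node's slot, so its services come across.
        self.slots[self.slots.index(dead)] = new

    def services_on(self, node):
        return self.services[self.slots.index(node)]


cluster = EdgeCluster(["edge-01", "edge-02"])
cluster.add("edge-03")
print(cluster.services_on("edge-03"))  # prints: []
cluster.swap("edge-02", "edge-04")
print(cluster.services_on("edge-04"))  # prints: ['SR-T0', 'DR-T0', 'DR-T1']
```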

Even though the now vanished Edge Node wasn’t around, if you try to manually remove it from the Edge Cluster, it won’t let you as it will throw various dependency errors. That just leaves you with the swap out method.

BUT I hear you ask… WHAT HAPPENS IF ALL MY EDGE NODES HAVE DIED?!

Well, you are going to have a very bad day for sure! Remember that all N/S traffic needs to go via an Edge Node where the SRs reside. So, you should still have E/W routing/traffic, as it is done as locally as possible, but if you need any of the services or access out, you are out of luck.

But this can be recovered quite easily, just stay calm!

Log into your NSX Manager and do the swap out method and you will have one(1) active Edge Node again, and then you can sort out the second too. Just adding a third Edge Node into the cluster, as discussed earlier, does nothing.

So now you have your Edge Nodes and N/S traffic flowing again!

There are a million and one ways to break environments, specifically the Edge components, and while I’m sure we haven’t covered anywhere near all of the scenarios, we hope this blog post helps a few of you with issues you may run into.

Chris and I will try to get another post out in the next few weeks on some other Edge topics.
