Introduction

So, I have started getting into the world of NSX-T. I pretty much missed the NSX-V train, and while it is still early days in the T world, I wanted to get on board.

This led me to attend the NSX-T v2.4 ICM (Install, Configure, Manage) course, and I have to say it has been one of the best courses I have ever attended. Our instructor seriously knew his stuff and the room was full of highly skilled networking and virtualisation guys.

I asked lots and lots of questions and we had some very good discussions regarding topologies and packet flows etc.

At the end of the fifth day, a couple of the guys and I had some time to burn, and we started discussing component failure scenarios. It is a topic I find very interesting: failures can happen in many ways, and I am curious about how each one is handled.

Now lots of people ASSUME XYZ will happen, then you do ABC and you will be back in business. But if you have NEVER VALIDATED that ASSUMPTION… it then becomes a RISK!

So @UltTransformer just said “Let’s break the lab and find out!” I have to say I like his style haha.

So Chris Noon (@UltTransformer), Ahmed (who works for VMware in Dubai) and I got busy!

NSX-T Manager Failures

So, when it comes to the NSX-T Manager, your initial deployment is one (1) node, and left on its own it allows full read/write (R/W). The recommended number for Production is three (3), and the maximum you can have is also three (3)!

Single (1) node NSX-T Manager Deployment = R/W

Three (3) node NSX-T Manager Deployment = R/W

Now, when you have a three (3) node NSX-T Manager deployment and you lose one (1) node, you still have full R/W access. But if you lose another node and go down to just one (1) NSX-T Manager node remaining, it will become read-only!

So, if you never install more than one (1) node (for PoC/labs etc.), you are fine. But if you ever deploy more than that and end up back at one (1) NSX-T Manager node, you won’t be able to edit anything.
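The read/write rules above boil down to a majority check. Here is a minimal sketch in Python, assuming (this is my reading of the behaviour we saw, not something taken from VMware's documentation) that the cluster stays R/W as long as more than half of the deployed Manager nodes are up. The function name and the model are purely illustrative and do not talk to a real NSX-T Manager.

```python
def manager_cluster_mode(deployed_nodes: int, nodes_up: int) -> str:
    """Return the expected management plane state in this toy model.

    Assumption: the cluster is writable only while a strict majority of
    the deployed Manager nodes is still running.
    """
    if not 1 <= deployed_nodes <= 3:
        raise ValueError("this model only covers clusters of 1 to 3 nodes")
    if not 0 <= nodes_up <= deployed_nodes:
        raise ValueError("nodes_up must be between 0 and deployed_nodes")
    if nodes_up == 0:
        return "down"
    if nodes_up * 2 > deployed_nodes:
        return "read/write"
    return "read-only"


if __name__ == "__main__":
    # The four cases discussed in this post.
    for deployed, up in [(1, 1), (3, 3), (3, 2), (3, 1)]:
        print(f"{deployed} deployed, {up} up -> {manager_cluster_mode(deployed, up)}")
```

The same rule covers both the single-node lab (one of one is a majority) and the three-node cluster that has shrunk to one node (one of three is not).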

The procedure listed below is for when you have lost two (2) of your three (3) Manager nodes and need to make the single remaining node R/W:

The CLI command deactivate cluster is available for situations where a majority of the manager nodes in a cluster are unrecoverable. When this command is run on a manager node, the node assumes that the other nodes are unrecoverable and will form a one-node cluster. After the deactivate cluster command is completed, you should delete the other nodes in the cluster.

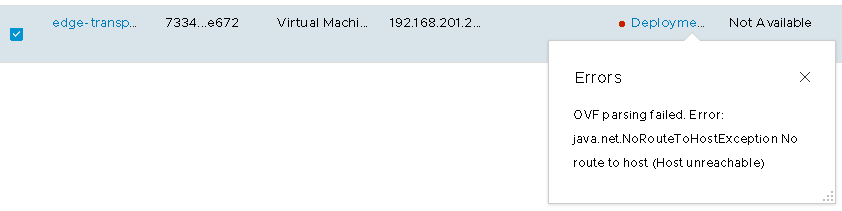
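To make the effect of deactivate cluster a bit more concrete, here is a small Python sketch of the recovery described above. It is a toy model only: the ManagerCluster class, its method names and the node names are all made up for illustration, and the majority rule for R/W is an assumption based on what we saw in the lab. Nothing here calls the real NSX-T CLI or API.

```python
class ManagerCluster:
    """Toy model of an NSX-T Manager cluster (hypothetical, not a real API)."""

    def __init__(self, members):
        self.members = set(members)  # nodes the cluster believes it has
        self.up = set(members)       # nodes that are actually running

    def power_off(self, node):
        self.up.discard(node)

    def is_writable(self):
        # Assumption: writes need a strict majority of the cluster members.
        return len(self.up) * 2 > len(self.members)

    def deactivate_cluster(self, surviving_node):
        """Model running 'deactivate cluster' on surviving_node."""
        if surviving_node not in self.up:
            raise ValueError("the command has to run on a node that is still up")
        # The surviving node assumes the others are unrecoverable
        # and forms a one-node cluster on its own.
        self.members = {surviving_node}
        self.up = {surviving_node}


if __name__ == "__main__":
    cluster = ManagerCluster(["mgr-a", "mgr-b", "mgr-c"])
    cluster.power_off("mgr-b")
    cluster.power_off("mgr-c")
    print(cluster.is_writable())   # False: one of three members left
    cluster.deactivate_cluster("mgr-a")
    print(cluster.is_writable())   # True: one of one member
```

In this model the remaining node becomes writable again only because the membership shrinks to one, which is also why the old nodes should be deleted afterwards rather than powered back on.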

Now, one interesting thing we found out: when @UltTransformer powered down one (1) of his three (3) NSX-T Manager nodes, he was obviously left with two (2), and everything seemed to be working fine. But when he tried to deploy another edge transport node, it kept failing with an OVF error. Once he powered the third NSX-T Manager back on, he could deploy the edge node fine.

This only seems to happen if the primary/original manager is the one that is lost. The deployment still failed when using the VIP, so there appears to be some high availability with the NSX-T Managers, but it’s not perfect… yet.

So I turned off all my NSX-T Manager appliances, and while the Managers were off there were no issues with E/W or N/S traffic. Everything was pingable just as before. This is what we would expect, as the management plane shouldn’t have any impact on the day-to-day running of the data plane.

Interestingly, if you lose all the managers, you are unable to move VMs between hosts. This is likely because of how the VMs are tracked within the segments.

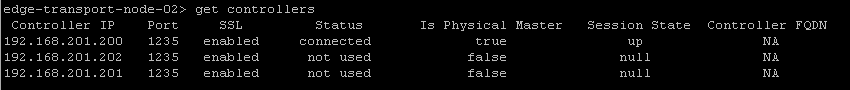

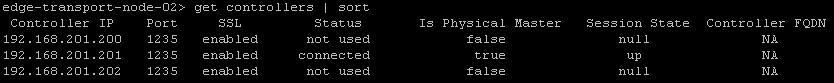

I wondered if this was the same in a three (3) node cluster if you lost one (1) node. Luckily @UltTransformer has a lab with enough resources to test it out. Losing one (1) NSX-T Manager forces the hosts to start using another Manager/Controller as their “physical master”, and VMs are allowed to move around as expected.

I have been told that if you lose one (1) NSX-T Manager node out of a cluster, you should redeploy a new one from the NSX-T Manager interface and not try any kind of restore. I assume this is because of the distributed Corfu database and the need to keep everything in sync.

This way NSX-T Manager knows where it stands at all times!

@UltTransformer and I have seen a lot of blog posts about best practice backup and restore procedures, but not much about the behaviour of the environment during one of these failures. With a million and one creative ways to destroy an environment, we have lots more to blog about. But this is a good point for us to end our first blog post, and we will work on bringing some more “unique” content around failure scenarios in the coming weeks!
