So, where I currently work is a heavy Compellent shop.

Let me give you some of the back story.

We have multiple SC8000s spread across 2 sites. These were specced up years ago (before my time), and are now reaching EOL.

One SC8000 was reaching EOL and no one let the company know, which was a big slip-up by Dell. Because the original SC8000 was an upgrade from the old white-box controllers Compellent used to use, all the disks and enclosures carried over. The issue was that these were THAT old that support was falling off for them, which meant that a good chunk of the disks on that SAN were now going EOL. The business was only made aware when it went to tender for a new SAN and was warned that one of the SANs was falling out of support in a few months!

Dell, after much wrangling, agreed to extend the support by a few months so we would have time to spec up a new SAN. I thought this would have been pretty simple: Dell made the mistake, so offering a warranty extension should have been a given, and the amount of wrangling that went on had me very puzzled. The problem was that I had encouraged the business to go out to tender to a few SAN vendors, as the SAN landscape has changed, and still is changing, at a rapid pace.

But because of this failure by Dell, the only real option left was to stay with Compellent. Now that is not really a bad thing by any means, but Dell had managed to keep the business by offering an extension of support while a new Dell Compellent was specced up and commissioned.

Speccing up a new Array

Dell provides you with a web-based tool called Live Optics, which is the old Dell DPACK but made much fancier. You deploy an appliance out into

The old DPACK needed access to every VM that had RDMs attached, which could make things more problematic. This new version doesn’t need that level of access: just point it at your vCenter and away it goes.

Also, don’t get me started on RDMs, heh.

So after using Dell’s Live Optics, we managed to get a Dell Compellent SC5020 hybrid array. With its SSDs and 10k disks, this array will be in a solid position to replace not just the EOL SC8000 but the second SC8000 onsite too. Thanks to the massive consolidation ratios you can achieve these days, 2 full racks are going to be consolidated down to half a rack at most!

The SC5020

When the SAN arrived onsite, I did a full audit of it just to make sure everything had come exactly as specified.

The old SC8000s are basically 2x Dell R720s with 2x 2 port FC cards and 2x 4 port 6Gb SAS cards per controller/server.

The new SC5020 is basically a purpose-built unit, a (dumb) chassis with 2x hot-swappable controllers and 2x hot-swappable power supplies. Each controller has 1x 4 port 16Gb FC card and 2x 12Gb SAS ports.

The business had paid for Dell ProDeploy to send an engineer to site to help commission it. I spoke to the Project Manager assigned to the deployment, and he confirmed that I could rack and patch it but not power it on. The controllers run through a setup on first boot, which is fully automated and can take about an hour.

I managed to get hold of the 5020 Deployment Guide that the engineers use, and it seemed like a pretty standard deployment.

I created DNS entries for both controllers and the virtual management IP, and did the same for the iDRACs. The iDRACs share the same port as the management interface and do not support VLANs, so they have to sit in the same L2 segment.

FC Front End Patching

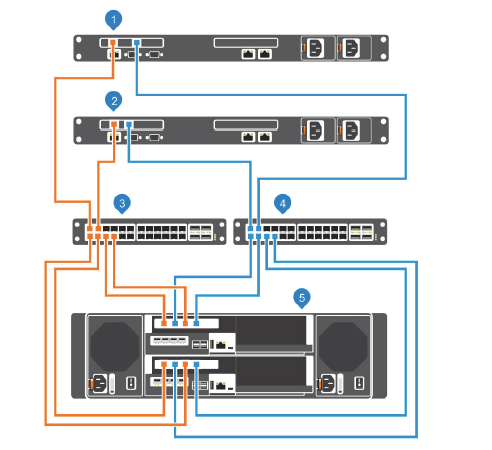

As you can see, ports 1 and 3 on each FC card go to Fabric A and ports 2 and 4 go to Fabric B. So you end up with two FC domains, each containing ports from both controllers.

Now, the Compellent uses the concept of physical ports and virtual ports for fail-over between controllers. Each physical port is given a virtual port, so you end up with 8x physical FC ports and 8x virtual ports in total.

All hosts are zoned into the virtual ports only. If there is a controller failure the virtual ports flip over to the working controller and the hosts are none the wiser!
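To make that layout concrete, here is a minimal Python sketch that enumerates the front-end ports described above. It models two controllers with one 4-port FC card each. Ports 1 and 3 go to Fabric A, ports 2 and 4 go to Fabric B, and each physical port has one virtual port. The controller labels (`ctrl1`, `ctrl2`) and the tuple format are hypothetical and only for illustration. This is not how the Compellent itself names or tracks its ports.

```python
from collections import defaultdict

CONTROLLERS = ("ctrl1", "ctrl2")  # hypothetical labels for the two controllers
PORTS_PER_CARD = 4                # one 4-port 16Gb FC card per controller


def fabric_for(port: int) -> str:
    """Ports 1 and 3 are patched to Fabric A, ports 2 and 4 to Fabric B."""
    return "A" if port % 2 == 1 else "B"


def build_port_map() -> dict:
    """Map each fabric to its (controller, port, kind) entries."""
    fabrics = defaultdict(list)
    for ctrl in CONTROLLERS:
        for port in range(1, PORTS_PER_CARD + 1):
            fabric = fabric_for(port)
            # Every physical port is paired with exactly one virtual port.
            fabrics[fabric].append((ctrl, port, "physical"))
            fabrics[fabric].append((ctrl, port, "virtual"))
    return dict(fabrics)


if __name__ == "__main__":
    for fabric, entries in sorted(build_port_map().items()):
        virtual = [e for e in entries if e[2] == "virtual"]
        print(f"Fabric {fabric}: {len(entries)} ports, {len(virtual)} virtual ports for host zoning")
```

Each fabric ends up with 4 physical and 4 virtual ports spread across both controllers. Only the virtual ones get zoned to hosts.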

If you want to use FC SAN-to-SAN replication for things such as Live Migrations, then you have to zone the 2 Compellents together in a specific way (the replication zones I created are listed in the zoning section below).

Live Migration will sync the volume to both SANs and then allow you to switch over to the new SAN. All front-end mappings and naa.IDs remain the same, and the ESXi hosts are unaware. In my testing I did notice a decent loss in I/O performance during the sync, which sorted itself out after the switchover. I am assuming this was down to the older SAN running 15k/7k disks, which simply isn’t as good as the new 5020. So for the business, Live Migrations will have to be done out of hours (OOH).

SAS 12Gb Back End Patching

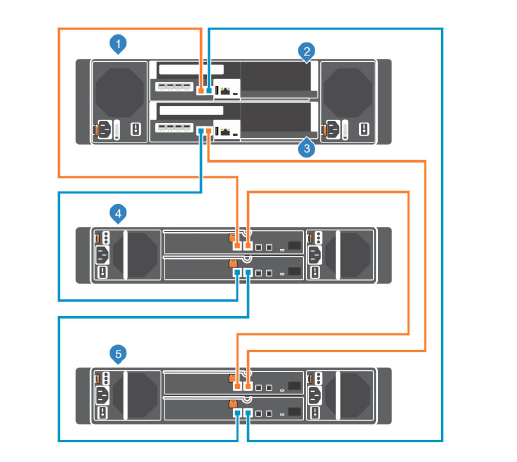

The SC5020 only supports 1 chain with 2 sides per chain, and you patch it up as shown here.

When the Dell engineer arrived on site, he commented that this was one of the easiest deployments he had ever had to do! Everything was configured to spec; all he had to do was label it and turn it on, haha.

Brocade Zoning

The good and the bad with FC is that once it’s configured, it just runs and runs and runs. Unless you have an HBA or SFP failure (which in my experience are very rare), most people never really touch their fabric again. But when you need to work on it, I have to go

I’ve been in places where, because it is a dedicated fabric, people very rarely log in to the switches and they do not even get updated (I mean, it is working, so just leave it alone, right?!). I am joking, btw, haha.

Now, I am no expert in zoning on the Brocades, or even in doing it from the command line. But I can do it via the Java web interface! The issue is, well... Java, and getting it running. I had already anticipated this and upgraded the Brocade switches to the latest supported firmware version for these models.

Now, the firmware upgrade on Brocades is fantastic. The HA setup on each switch means it patches one side of the switch at a time. When one side completes, it flips over and does the other side without any downtime to the ports.

I created an FTP server so I could pull the running configs off the switches and patch them. These configs can then be archived away in case there is an issue in the future.

FC Switch Configs

Backing up configs

```
admin> configupload
Protocol (scp, ftp, sftp, local) [ftp]: ftp
Server Name or IP Address [host]: FTP IP
User Name [user]: anonymous
Path/Filename [<home dir>/config.txt]: /FCswitchconfig/Configs/switchname01_27_02_2018.txt
Section (all|chassis|switch [all]): all
configUpload complete: All selected config parameters are uploaded
```
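If you archive a config per switch on every run, a consistent naming scheme helps. Here is a small Python sketch that assumes the `<switch name>_DD_MM_YYYY.txt` pattern used in the transcript above. `backup_path` and `ARCHIVE_DIR` are hypothetical names. The result is simply what you would type at the Path/Filename prompt.

```python
from datetime import date
from pathlib import PurePosixPath

# Hypothetical archive folder on the FTP server, taken from the transcript above.
ARCHIVE_DIR = PurePosixPath("/FCswitchconfig/Configs")


def backup_path(switch_name: str, when: date) -> str:
    """Build a date-stamped target path in the <switch>_DD_MM_YYYY.txt style."""
    return str(ARCHIVE_DIR / f"{switch_name}_{when:%d_%m_%Y}.txt")


if __name__ == "__main__":
    print(backup_path("switchname01", date(2018, 2, 27)))
```

One design note: DD_MM_YYYY names do not sort chronologically in a directory listing. If that matters to you, a `%Y_%m_%d` format would.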

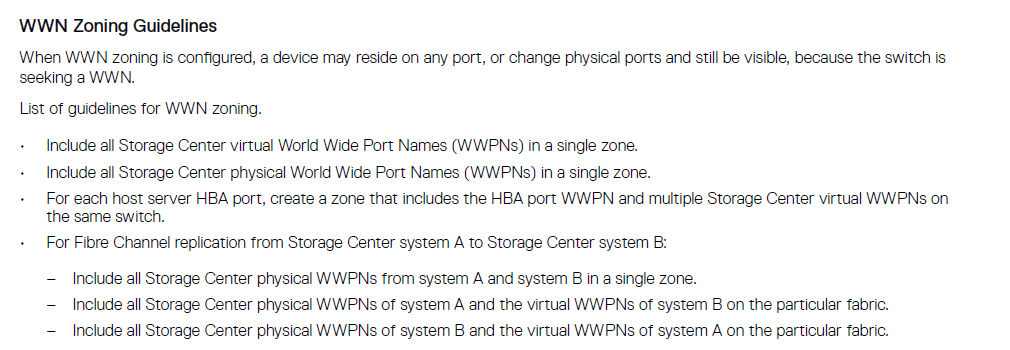

You create an alias for each physical and virtual port of the SAN in each fabric.

You then create a zone for each host, linking the host’s HBA to the SAN’s virtual ports in that fabric.

So it’s Zone Admin > Create Alias > Create Zone > Save Config > Enable Config.

So here is how I did it on one switch in each fabric. Remember that when you enable the config on one switch, it cascades across the whole fabric!

Aliases created:

- compellent_SNXXXX1_S1_P2_PHYSICAL
- compellent_SNXXXX1_S1_P2_VIRTUAL
- compellent_SNXXXX1_S1_P4_PHYSICAL
- compellent_SNXXXX1_S1_P4_VIRTUAL
- compellent_SNXXXX2_S1_P2_PHYSICAL
- compellent_SNXXXX2_S1_P2_VIRTUAL
- compellent_SNXXXX2_S1_P4_PHYSICAL
- compellent_SNXXXX2_S1_P4_VIRTUAL

Zones created, one per host, each containing the san101 virtual ports and the host HBA:

- z_HOST001_compellent_NEW
- z_HOST002_compellent_NEW
- z_HOST003_compellent_NEW
- z_HOST004_compellent_NEW

Zones created to allow OLD_SAN/NEW_SAN replication over FC:

- z_compellent_NEW_phys_OLD_virtual
- z_compellent_NEW_OLD_physical
- z_compellent_NEW_phys_OLD_virtual
- z_compellent_101_physical
- z_compellent_101_virtual

This was done on both fabrics. Obviously, swap S1_P2 and S1_P4 for S1_P1 and S1_P3 on the other switch.
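The zoning above was done through the web interface. For anyone happier at the command line, here is a hedged Python sketch that prints roughly equivalent Brocade FOS commands (`alicreate`, `zonecreate`, `cfgadd`, `cfgsave`, `cfgenable`) for one host zone on the switch holding ports 2 and 4. All WWPNs are hypothetical placeholders. So are the host alias `HOST001_HBA1` and the zoning config name `fabricB_cfg`. Check the output against your own fabric and your switch's documentation before running anything.

```python
# Hypothetical WWPNs for illustration only: substitute the real ones
# from your own switch before using any of this.
SAN_VIRTUAL_ALIASES = {
    "compellent_SNXXXX1_S1_P2_VIRTUAL": "50:00:d3:10:00:aa:00:02",
    "compellent_SNXXXX1_S1_P4_VIRTUAL": "50:00:d3:10:00:aa:00:04",
    "compellent_SNXXXX2_S1_P2_VIRTUAL": "50:00:d3:10:00:bb:00:02",
    "compellent_SNXXXX2_S1_P4_VIRTUAL": "50:00:d3:10:00:bb:00:04",
}


def zoning_commands(cfg_name, zone_name, host_alias, host_wwpn, san_aliases):
    """Return FOS commands mirroring Create Alias > Create Zone > Save > Enable."""
    cmds = [f'alicreate "{alias}", "{wwpn}"' for alias, wwpn in san_aliases.items()]
    cmds.append(f'alicreate "{host_alias}", "{host_wwpn}"')
    # One host HBA plus the SAN's virtual ports in this fabric.
    members = "; ".join([host_alias, *san_aliases])
    cmds.append(f'zonecreate "{zone_name}", "{members}"')
    # Add the zone to the existing zoning config, then save and enable it.
    cmds.append(f'cfgadd "{cfg_name}", "{zone_name}"')
    cmds.append("cfgsave")
    cmds.append(f'cfgenable "{cfg_name}"')
    return cmds


if __name__ == "__main__":
    for cmd in zoning_commands("fabricB_cfg", "z_HOST001_compellent_NEW",
                               "HOST001_HBA1", "10:00:00:90:fa:00:00:01",
                               SAN_VIRTUAL_ALIASES):
        print(cmd)
```

In practice, you only need to create the SAN aliases once. Later host zones just reuse them, so after the first host you would drop those `alicreate` lines.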

Fail-over testing

So I went through a barrage of tests, just to make myself feel happy:

- Live Migration: I tested it with a test datastore, and it copied across FC fine. The naa.ID remains the same. You do a rescan on the hosts before you flip it over, and as far as I can tell ESXi doesn’t even notice

- The only thing I noticed was that my test VMs were pushing about 150MB/sec each, but as soon as the sync started they dropped to about 30MB/sec each. Once the sync completed they went back to normal speed, and the same was true after the switchover

- Dell’s testing basically involves rebooting each controller, and that’s it

- I also tested iSCSI replication to vdrsan001, and it works fine

- We tested losing one SAS loop at a time

- We unpatched the FC cables from each switch, and that was fine. The virtual ports do not move; they just vanish, and I/O continues on the 2 remaining ports each controller has available

- Pulled the power on one side, and then did the same on the other side

- Rebooted controllers multiple times

- Shut down each controller and brought it back up via iDRAC, as there is no power button on the controllers. If you have no iDRAC access, the only way to power a controller back up after a shutdown is to remove it and re-seat it in the chassis

- Dell says pulling a controller out at random is not supported and is not part of their testing, even though the controllers are hot-swappable? We did it anyway 🙂

I also tested unplugging the FC cables from the HBA on a single controller to see how it handled fail-over there. It did not go exactly as I had hoped. When you unplug a cable, the virtual port fails over to another port on the same HBA, which maintains connectivity. But as you keep unpatching, the virtual ports have nowhere to go on the same controller and just vanish. I was hoping to see the virtual ports fail over to the other controller... but this did not happen, although it works perfectly when a whole controller fails over. I logged a call with Dell Support and was told this was not a valid fail-over test. The controller sees it as a media disconnect and does not fail the virtual ports over. I can kind of see that, but then again, any volumes owned by that controller now have no I/O and are dead in the water. But I was told that is how it is! *shrugs*
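To illustrate the difference between the two cases, here is a toy Python model of the behaviour observed above. It is not Dell's actual fail-over logic. It makes three assumptions: a virtual port only ever moves to a live port in the same fabric, a cable pull only lets it move within the same controller, and a controller failure moves all of that controller's virtual ports to the peer. The controller names and the `FrontEnd` class are hypothetical.

```python
def fabric_for(port: int) -> str:
    """Ports 1 and 3 sit in Fabric A, ports 2 and 4 in Fabric B."""
    return "A" if port % 2 == 1 else "B"


class FrontEnd:
    """Toy model of the observed virtual port behaviour (not Dell's algorithm)."""

    def __init__(self, controllers=("ctrl1", "ctrl2"), ports=(1, 2, 3, 4)):
        self.controllers = list(controllers)
        self.running = set(controllers)
        # Physical ports that still have a working cable.
        self.link_up = {(c, p) for c in controllers for p in ports}
        # Virtual port (named after its home physical port) -> where it
        # currently lives; None means it has vanished.
        self.vports = {(c, p): (c, p) for c in controllers for p in ports}

    def _live_ports(self, ctrl, fabric):
        """Cabled ports on a running controller in the given fabric."""
        if ctrl not in self.running:
            return []
        return sorted(p for (c, p) in self.link_up if c == ctrl and fabric_for(p) == fabric)

    def _rehome(self, vport, ctrl):
        """Move a virtual port to the first live port on ctrl, or drop it."""
        ports = self._live_ports(ctrl, fabric_for(vport[1]))
        self.vports[vport] = (ctrl, ports[0]) if ports else None

    def pull_cable(self, ctrl, port):
        """Media disconnect: affected virtual ports only try the SAME controller."""
        self.link_up.discard((ctrl, port))
        for vport, where in list(self.vports.items()):
            if where == (ctrl, port):
                self._rehome(vport, ctrl)

    def fail_controller(self, ctrl):
        """Controller failure: all its virtual ports move to the peer controller."""
        self.running.discard(ctrl)
        peer = next(c for c in self.controllers if c != ctrl)
        for vport in list(self.vports):
            if vport[0] == ctrl:
                self._rehome(vport, peer)

    def online(self):
        """Number of virtual ports still visible to the fabric."""
        return sum(where is not None for where in self.vports.values())


if __name__ == "__main__":
    fe = FrontEnd()
    fe.pull_cable("ctrl1", 1)
    fe.pull_cable("ctrl1", 3)
    print("after two cable pulls:", fe.online(), "virtual ports online")
    fe2 = FrontEnd()
    fe2.fail_controller("ctrl1")
    print("after controller failure:", fe2.online(), "virtual ports online")
```

In this model, pulling port 1 and then port 3 on `ctrl1` leaves two virtual ports with nowhere to go (the "dead in the water" case). Failing `ctrl1` outright keeps all eight virtual ports online on `ctrl2`.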
