For the last couple of days I have been making updates to my version of a cluster sizing tool. Most parts of the table are pretty self-explanatory, but today I realized I should probably add a few words of caution. So here are my words of caution:

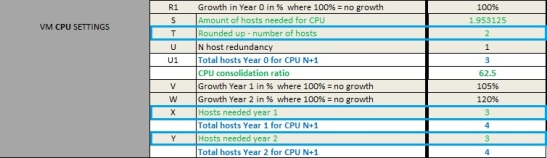

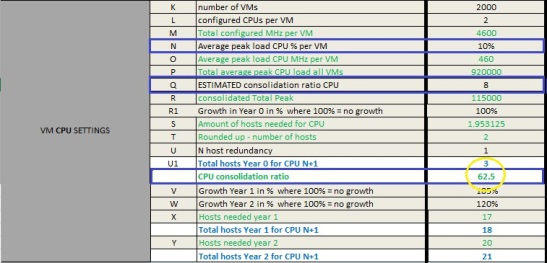

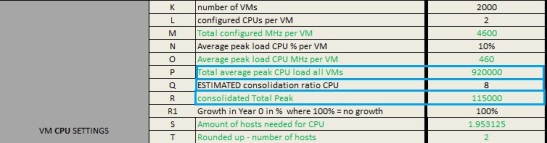

I have used a small trick to calculate the impact of sharing CPUs (the vCPU to pCPU ratio). First I calculate the total CPU usage (peak or average) in MHz, and then I divide that value by the vCPU to pCPU ratio I expect to achieve. It is not completely exact, so if someone has a better idea, please let me know.

The clever thing about this table is that when the PEAK or AVG CPU load goes up (whichever value you decide to use for your calculation; there is only one field for it), the vCPU to pCPU ratio goes down and you will need more hosts. Conversely, if the PEAK or AVG CPU load goes down, the CPU consolidation ratio line will show a higher consolidation ratio, and the table will show whether you need more or fewer hosts for the workloads you want to support.
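The trick described above can be sketched roughly as follows. The function and parameter names are hypothetical, and the way total CPU usage is estimated here (number of VMs × vCPUs per VM × MHz per vCPU × load %) is an assumption, not necessarily how the spreadsheet does it. The ratio is kept fixed, so the sketch only shows the load side of the relationship: a higher load means more MHz the hosts have to deliver, and therefore more hosts.

```python
def consolidated_cpu_mhz(num_vms: int, vcpus_per_vm: int, mhz_per_vcpu: float,
                         load_pct: float, vcpu_per_pcpu: float) -> float:
    """Physical CPU MHz still needed once vCPUs share physical cores.

    Assumed model (hypothetical): total usage = VMs x vCPUs x MHz per vCPU
    x (load % / 100), which is then divided by the expected vCPU to pCPU ratio.
    """
    if vcpu_per_pcpu <= 0:
        raise ValueError("vCPU to pCPU ratio must be positive")
    total_usage_mhz = num_vms * vcpus_per_vm * mhz_per_vcpu * load_pct / 100
    return total_usage_mhz / vcpu_per_pcpu


# With the same ratio, a higher load raises the MHz the hosts must deliver.
for load in (40, 60, 80):
    print(load, consolidated_cpu_mhz(200, 2, 2600, load, 4))
```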

WARNING: OVERALLOCATION

The tool can give the false impression that over-allocating CPU to absurd levels is possible, as long as you expect the maximum CPU load of your VMs to be very low.

Let me give you an example:

You can safely play around with the average PEAK load CPU % per VM, as long as you remember that the advised vCPU to pCPU ratio is 4 to 6 for normal server workloads and up to 8 to 10 for VDI. Keep in mind that not all workloads are equal: some share CPU easily with others, while others do not. And yes, I have seen environments where the vCPU to pCPU ratio is over 10, but I have also seen some where it is only 1 to 2 vCPUs per pCPU.
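To guard against the over-allocation trap, a planned ratio can be compared with the rules of thumb from the paragraph above. This is a minimal sketch: `ADVISED_RATIO` and `check_ratio` are hypothetical names, the ranges are the advised values quoted above (not hard limits), and since workloads differ the check only flags a ratio rather than forbidding it.

```python
# Advised vCPU to pCPU ranges quoted in the text (rules of thumb, not hard limits).
ADVISED_RATIO = {
    "server": (4, 6),
    "vdi": (8, 10),
}


def check_ratio(vcpu_per_pcpu: float, workload: str) -> str:
    """Compare a planned vCPU to pCPU ratio with the advised range for a workload type."""
    low, high = ADVISED_RATIO[workload]
    if vcpu_per_pcpu > high:
        return f"WARNING: {vcpu_per_pcpu}:1 is above the advised {low}-{high}:1 for {workload}"
    if vcpu_per_pcpu < low:
        return f"below: {vcpu_per_pcpu}:1 is under the advised {low}-{high}:1 for {workload}"
    return f"ok: {vcpu_per_pcpu}:1 is within the advised {low}-{high}:1 for {workload}"


print(check_ratio(12, "server"))
print(check_ratio(9, "vdi"))
```

A "below" result is not necessarily a problem; as noted above, some environments genuinely need 1 to 2 vCPUs per pCPU.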

Personally, I leave this setting at 80%. One reason is Windows updates, which might run simultaneously on many VMs and push their CPU load up high, unless you can orchestrate them using SCCM or the like.

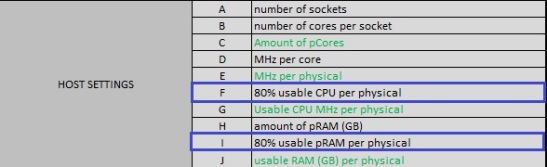

‘80% usable CPU per physical’ and ‘80% usable pRAM per physical’?

Why on earth would you use only 80% of your server’s total capacity? Best practices say that 20% should be kept free for running ESXi itself, handling vMotions and the like. If you have VAAI and the storage array handles Storage vMotion (sVMotion), cloning and so on, you can probably get away with decreasing this reserve a bit (that is, raising the 80% value). If your ESXi server handles everything itself, leave this value at 80%.
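The effect of the 80% setting on the host count can be sketched like this. The function name, the hypothetical host (2 sockets × 12 cores × 2,600 MHz, 512 GB RAM) and the demand figures are all illustrative assumptions; the point is that planning against usable rather than raw capacity can add a host, and that whichever resource runs out first (CPU or RAM) decides the count.

```python
import math


def hosts_needed(cpu_mhz_needed: float, ram_gb_needed: float,
                 host_mhz: float, host_ram_gb: float,
                 usable_cpu: float = 0.80, usable_ram: float = 0.80) -> int:
    """Hosts required when only a fraction of each host's CPU and RAM is planned for VMs.

    The remainder (20% by default) is headroom for ESXi itself, vMotion and the like.
    """
    usable_mhz = host_mhz * usable_cpu
    usable_ram_gb = host_ram_gb * usable_ram
    by_cpu = math.ceil(cpu_mhz_needed / usable_mhz)
    by_ram = math.ceil(ram_gb_needed / usable_ram_gb)
    # The scarcer resource decides the host count.
    return max(by_cpu, by_ram)


host_mhz = 2 * 12 * 2600  # hypothetical host: 2 sockets x 12 cores x 2.6 GHz
print(hosts_needed(156000, 2400, host_mhz, 512))            # with 20% headroom
print(hosts_needed(156000, 2400, host_mhz, 512, 1.0, 1.0))  # using 100% of each host
```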

ESTIMATED CONSOLIDATION RATIO and CONSOLIDATED TOTAL PEAK

So what is the ‘Estimated Consolidation Ratio’? It is your assumption of how many vCPUs you will be able to schedule on a single physical core without a noticeable loss of performance for the users. This value determines how the ‘Consolidated Total Peak’ is calculated: the ‘Total average peak CPU load all VM’ is divided by the ‘Estimated Consolidation Ratio’.
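Written as a formula, using the field names from the table, followed by a worked example with hypothetical numbers (a total average peak of 624,000 MHz at an estimated ratio of 4 and of 6):

$$
\text{Consolidated Total Peak} = \frac{\text{Total average peak CPU load all VM (MHz)}}{\text{Estimated Consolidation Ratio}}
$$

$$
\frac{624\,000\ \text{MHz}}{4} = 156\,000\ \text{MHz}, \qquad \frac{624\,000\ \text{MHz}}{6} = 104\,000\ \text{MHz}
$$

A more optimistic ratio therefore shrinks the Consolidated Total Peak, which is exactly why an unrealistic ratio makes the cluster look smaller than it should be.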

GROWTH IN YEAR 0 IN % WHERE 100% = NO GROWTH

Yes, 100% is the status quo, so 120% means a 20% increase. Why did I do it like this? Simple: environments do not only grow, sometimes they shrink as well. Setting this value to 80% means a 20% reduction. For example, a P2V migration can initially increase your environment, but once the implementation has been completed, consolidation usually follows and the environment shrinks again.

So how do I calculate growth over 3 different years? First I calculate the need in MHz and/or RAM for ‘YEAR 0’ and multiply it by the growth percentage. For each following year I do the same calculation, based on the previous year’s result. Growth is calculated neither from the previous year’s number of servers nor from the N+1 number of servers.

Each year’s number of servers is calculated from the MHz and RAM calculated for that year.
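The growth logic described above can be sketched as follows. The function name, the inputs and the 80% usable capacity are assumptions for illustration; the growth list covers Year 0, Year 1 and Year 2 and follows the table’s convention (100 = no growth, 120 = +20%, 80 = -20%). Growth compounds on the previous year’s MHz and RAM, and the host count is derived fresh from each year’s totals rather than grown itself.

```python
import math


def plan_years(current_mhz: float, current_ram_gb: float, growth_pct: list[float],
               host_mhz: float, host_ram_gb: float,
               usable: float = 0.80) -> list[tuple[float, float, int]]:
    """Return (MHz, RAM GB, hosts) per year for a list of yearly growth percentages."""
    plan = []
    mhz, ram = current_mhz, current_ram_gb
    for pct in growth_pct:
        # Growth is applied to the previous year's MHz and RAM, never to its host count.
        mhz = mhz * pct / 100
        ram = ram * pct / 100
        hosts = max(math.ceil(mhz / (host_mhz * usable)),
                    math.ceil(ram / (host_ram_gb * usable)))
        plan.append((mhz, ram, hosts))
    return plan


# Hypothetical: +20% in Year 1, then shrinking by 20% in Year 2 after consolidation.
for year, (mhz, ram, hosts) in enumerate(plan_years(156000, 2400, [100, 120, 80], 62400, 512)):
    print(f"Year {year}: {mhz:.0f} MHz, {ram:.0f} GB, {hosts} hosts")
```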

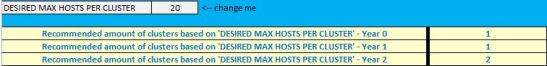

HOW CAN YOU BALANCE YOUR HOSTS OVER CLUSTERS

Change the value for ‘DESIRED MAX HOSTS PER CLUSTER’. Keep a few things in mind:

- vSphere 5.5 has a maximum of 32 hosts per cluster and vSphere 6 has a maximum of 64 hosts per cluster.

- The bigger the cluster, the longer it takes vCenter to calculate DRS.

- If you are using vSphere 5.5 and Year 0 already has 26 hosts, Year 1 has 30 hosts and Year 2 has 35 hosts, it may be better to split the hosts over 2 clusters starting from Year 0.
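The balancing idea from the list above can be sketched like this. `split_into_clusters` and `VSPHERE_MAX_HOSTS` are hypothetical names, `desired_max` stands in for ‘DESIRED MAX HOSTS PER CLUSTER’, and spreading the hosts evenly over the fewest clusters allowed is my assumption about how you would balance them, not necessarily what the spreadsheet does.

```python
import math

# Hosts-per-cluster maxima quoted in the list above.
VSPHERE_MAX_HOSTS = {"5.5": 32, "6": 64}


def split_into_clusters(hosts: int, desired_max: int, version: str) -> list[int]:
    """Spread hosts as evenly as possible over the fewest clusters allowed."""
    if hosts <= 0:
        return []
    limit = min(desired_max, VSPHERE_MAX_HOSTS[version])
    clusters = math.ceil(hosts / limit)
    base, extra = divmod(hosts, clusters)
    # The first `extra` clusters get one additional host.
    return [base + 1 if i < extra else base for i in range(clusters)]


# The vSphere 5.5 example above, planned with 2 clusters from Year 0 onwards.
for year, hosts in (("Year 0", 26), ("Year 1", 30), ("Year 2", 35)):
    print(year, split_into_clusters(hosts, 20, "5.5"))
```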

Advised reads:

- When to Overcommit vCPU:pCPU for Monster VMs

- Josh Odgers’ wonderful online cluster calculator

- Best practices and advanced features for VMware High Availability