Lay of the Land

I have been working with a customer and came across something interesting.

When configuring two vCenters, most people don't share VLANs between them, management networks being the exception. Here, however, we had a VLAN spanning two vCenters that needed to be migrated to our new VCF site, where NSX-v would terminate the default gateway.

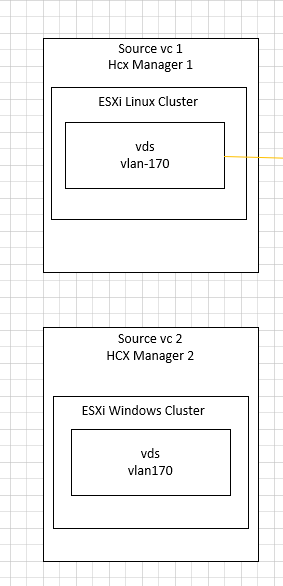

I think this is best shown in a diagram:

For some unknown reason, a decision was made to split the Windows and Linux workloads into two vCenters while keeping them on the same VLAN. Sometimes you revisit an earlier decision with fresh eyes and new information and realise it wasn't the best way to go. This is where tools like HCX, and upgrades or migrations in general, let you fix those issues!

I recall that when I worked for a customer, I had the choice of migrating to new vCenters or doing an in-place upgrade. Over the years, decisions made earlier by various people in the business no longer made sense, and times had changed. So I put forward the case for leaving as many of those previous bad decisions behind as possible and starting fresh. Some things would have to carry forward, but far fewer than with an in-place upgrade!

The Greener Grass

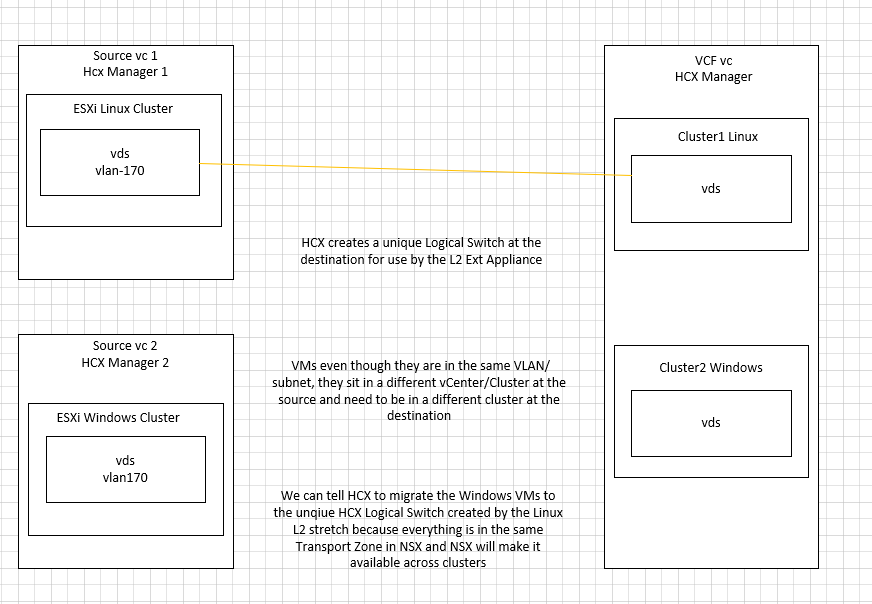

The destination is a VCF deployment with multiple clusters, each dedicated to a specific workload. Windows VMs and Linux VMs therefore have their own separate compute and storage resources, and their networking is managed by NSX-v.

Because the decision to put them in the same subnet was made years ago, it was decided that little could be done about it now. Re-IPing and all that jazz wasn't worth it for the legacy apps, so it was best to move them into VCF as-is. Going forward, with the new VCF deployment and every cluster having its own VDS, this issue will be a thing of the past.

I spent some time with Chris Noon working out the correct process, as he had come across this kind of thing before in his job at a VMware partner. I then took the process below back to the business and their networking team, and they confirmed that it would work exactly as they wanted.

Since the workloads will reside within the same vCenter, managed by the same NSX-v Manager, I can make this work.

Even though each destination VCF cluster has its own VDS, all the hosts are in the same NSX-v transport zone.

HCX and Networking

When HCX extends a network, it patches into the VDS at the source and the destination. Even though it is patched into only one destination cluster, all the hosts are in the same transport zone, so the logical switch that HCX creates in NSX-v for the stretch can be made available to every host in that transport zone, whichever cluster it belongs to, when it is needed!
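To make the transport-zone point concrete, here is a minimal Python sketch. It assumes a simplified model in which a logical switch is usable on any host whose cluster belongs to the switch's transport zone, regardless of which VDS that cluster uses. The host, cluster, VDS, transport zone and switch names are all hypothetical, and this models the relationship only; it is not an NSX-v or HCX API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    name: str
    cluster: str
    vds: str             # each destination cluster has its own VDS
    transport_zone: str  # NSX-v transport zone the host's cluster belongs to


@dataclass(frozen=True)
class LogicalSwitch:
    name: str
    transport_zone: str


def hosts_that_can_attach(switch: LogicalSwitch, hosts: list[Host]) -> list[str]:
    """Return the hosts where the logical switch can be used.

    In this simplified model only the transport zone matters; the host's
    cluster and VDS are ignored.
    """
    return [h.name for h in hosts if h.transport_zone == switch.transport_zone]


# Hypothetical inventory: two destination clusters with separate VDSes sharing
# one transport zone, plus a host in a different transport zone for contrast.
hosts = [
    Host("linux-esx01", "Linux", "vds-linux", "TZ-Compute"),
    Host("linux-esx02", "Linux", "vds-linux", "TZ-Compute"),
    Host("win-esx01", "Windows", "vds-windows", "TZ-Compute"),
    Host("other-esx01", "Other", "vds-other", "TZ-Other"),
]
stretch = LogicalSwitch("hcx-l2e-vlan100", "TZ-Compute")
print(hosts_that_can_attach(stretch, hosts))
# prints ['linux-esx01', 'linux-esx02', 'win-esx01']
```

The Windows host shows up even though the stretch was patched into the Linux cluster's VDS, which is the property the migration relies on.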

Let’s add to the first diagram:

So we extend from the Linux cluster VDS at source into the Linux cluster VDS at the destination.

But since the destination hosts are all in the same NSX transport zone, the VMs migrating into the Windows cluster can still use the layer 2 VNI perfectly fine. It does mean an additional hop for the Windows VMs, whose traffic trombones while the stretch is open: because the L2 extension appliance sits in the Linux cluster at the destination, the Windows VMs have to send traffic over there and then back across the stretch to the legacy source.

But now, when we kill the stretch and tell HCX to migrate the gateway into NSX, both sets of VMs in that VNI, even though they reside in different clusters, can use their gateway in NSX-v and be happy.
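The tromboning, and what changes once the gateway moves into NSX-v, can be sketched as a toy path model in Python. For illustration only, it assumes the HCX L2 extension appliance sits in the Linux cluster and that the gateway stays on the legacy source side until HCX migrates it. The hop labels and the `gateway_path` function are hypothetical and are not taken from any HCX tool.

```python
from __future__ import annotations


def gateway_path(vm_cluster: str, ne_cluster: str, stretch_open: bool) -> list[str]:
    """Hops a destination VM's routed traffic takes to reach its default gateway.

    Toy model: while the stretch is open, the gateway is still on the legacy
    source network; after the gateway is migrated, NSX-v terminates it locally.
    """
    hops = [f"VM in {vm_cluster} cluster"]
    if not stretch_open:
        # Gateway now lives in NSX-v and is reachable from any host in the transport zone.
        return hops + ["NSX-v gateway"]
    if vm_cluster != ne_cluster:
        # Extra VXLAN hop over to the cluster hosting the L2 extension appliance (the trombone).
        hops.append(f"VXLAN hop to {ne_cluster} cluster")
    return hops + [
        "HCX L2 appliance (destination)",
        "HCX L2 appliance (source)",
        "legacy gateway (source)",
    ]


for cluster in ("Linux", "Windows"):
    print(cluster, "stretched:", " > ".join(gateway_path(cluster, "Linux", True)))
print("Windows after cutover:", " > ".join(gateway_path("Windows", "Linux", False)))
```

While stretched, the Windows path is one hop longer than the Linux path; after the gateway migration, both clusters reach the gateway directly in NSX-v.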

If you have any questions, please reach out to me (@Dark_KnightUK) or Chris Noon (@UltTransformer) on Twitter!

Hello Bilal,

Good day.

Just wanted to get some clarity in the scenario explained so kindly confirm on the below points:

– To migrate the Windows workloads from source VC2 to the VCF vCenter, since the vCenter and HCX Manager are different, wouldn't we first need a new compute profile on the VCF HCX, a new compute profile on the source HCX (VC2), and a new service mesh, probably without a Network Extension appliance?

– If an ARP request is made from one of the destination Windows servers for one of the source Windows VMs, would the traffic path be [ Dest Windows VM > Linux Dest NE > Linux Source NE (VC1) > Source Windows VM (via external switch) ]?

Hope to get your kind reply.

Thanks.

Hi Amit,

Sorry for the delayed response!

If you are using Bulk Migration, it's fine as long as the IX appliance can reach the ESXi management vmk. Most customers put HCX/IX in the same network as vCenter, which gives it access to the ESXi hosts easily enough. This works perfectly well for bulk migration, but if you want to use HCX vMotion, you would most likely need different service meshes, since the vMotion network is usually isolated and different per cluster. If the same vMotion network spanned all the clusters, you could go with one service mesh, as it would have the access it needed. Also, bear in mind the limitation of one vMotion at a time PER service mesh: if you want faster vMotions across multiple clusters, a service mesh per cluster would allow more concurrent vMotion migrations too.
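As a rough illustration of the one-vMotion-per-service-mesh point, here is a back-of-the-envelope Python sketch. It assumes, purely for illustration, that every HCX vMotion takes about the same time and that per-cluster service meshes run fully in parallel. The cluster names, VM counts and the `vmotion_waves` function are hypothetical.

```python
from __future__ import annotations


def vmotion_waves(vms_per_cluster: dict[str, int], mesh_per_cluster: bool) -> int:
    """Count sequential HCX vMotion 'waves', assuming one vMotion at a time per service mesh."""
    if not vms_per_cluster:
        return 0
    if mesh_per_cluster:
        # Each cluster's mesh migrates in parallel, so the busiest cluster sets the pace.
        return max(vms_per_cluster.values())
    # A single shared mesh (only possible with a shared vMotion network) does them one by one.
    return sum(vms_per_cluster.values())


workload = {"Windows": 40, "Linux": 25}  # hypothetical VM counts
print(vmotion_waves(workload, mesh_per_cluster=False))  # 65
print(vmotion_waves(workload, mesh_per_cluster=True))   # 40
```

Real vMotion durations vary with VM size and link bandwidth, so treat the ratio rather than the absolute numbers as the takeaway.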

The traffic flow you mention looks correct to both Chris and me.

Hello Bilal,

Thank you for your kind response and extremely sorry for the delay in acknowledging it. I somehow overlooked it, my bad.

Thanks for all the insights and I totally agree on vMotion requirements and constraints.

However, I am unclear on one of the points you mentioned in your reply regarding bulk migration.

Even if we are doing bulk migration, isn't the IX's reach limited to the VMs within the same VC? If the workloads (the Windows VMs in our scenario) span a different VC, wouldn't a new site pairing, a new compute profile and hence a new IX be needed between source VC2 and the destination VCF site, since HCX and VC are in a 1:1 relationship?

Thanks once again for taking time out to reply.

Stay safe and healthy!

Thanks,

Amit

Hey Amit,

Sorry for the delayed response. Yep, it's all on a per-VC basis: HCX has a 1:1 mapping to a vCenter. So in my diagram, I have two source HCX Managers paired to a single destination HCX Manager.

So when I talk about the IX needing access to the vmks of the hosts, that assumes they are in the same vCenter; otherwise it won't work.
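A minimal Python sketch of that 1:1 rule, using hypothetical manager and vCenter names (VC1 for the Linux source and VC2 for the Windows source, as in the question above). It models the relationship only and is not the HCX API; `HcxManager`, `ix_can_reach` and `site_pairings` are illustrative names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class HcxManager:
    name: str
    vcenter: str  # each HCX Manager is paired 1:1 with one vCenter


def ix_can_reach(hcx: HcxManager, host_vcenter: str) -> bool:
    """An IX deployed by this HCX Manager only works with hosts in its own vCenter."""
    return hcx.vcenter == host_vcenter


def site_pairings(sources: list[HcxManager], destination: HcxManager) -> list[tuple[str, str]]:
    """One site pairing per source HCX Manager, all to the same destination manager."""
    return [(src.name, destination.name) for src in sources]


hcx_vc1 = HcxManager("hcx-vc1", "VC1")  # Linux source vCenter
hcx_vc2 = HcxManager("hcx-vc2", "VC2")  # Windows source vCenter
hcx_vcf = HcxManager("hcx-vcf", "VCF")

print(site_pairings([hcx_vc1, hcx_vc2], hcx_vcf))
# prints [('hcx-vc1', 'hcx-vcf'), ('hcx-vc2', 'hcx-vcf')]
print(ix_can_reach(hcx_vc1, "VC2"))  # False: VC2 hosts need hcx-vc2's own IX
```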

Thanks for the clarifications and your time.

It helps a lot.

Stay safe and healthy.